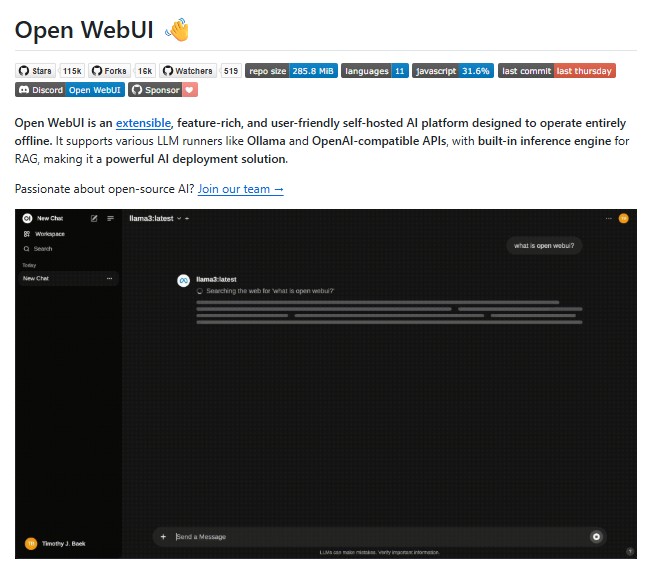

Running large language models securely and efficiently has become a priority for developers, enterprises and privacy-focused users. Cloud-based AI services are convenient, but they depend on remote servers, internet access and third-party control. Open WebUI addresses this: it is an open-source, self-hosted AI interface designed to run locally, giving users privacy, control and flexibility.

Built to support models through Ollama, OpenAI-compatible APIs, and native inference engines, Open WebUI has quickly become one of the most feature-rich and trusted local AI platforms. From multilingual support to advanced RAG pipelines and role-based access control, it is engineered for both individual experimentation and enterprise-grade AI workflows.

This article explores what makes Open WebUI one of the best AI platforms for self-hosting and how you can get started with it.

What is Open WebUI?

Open WebUI is a flexible self-hosted interface that lets users run AI models locally through runners such as Ollama and LM Studio, or connect to hosted LLM providers such as OpenAI, Groq and Mistral. It functions as a central hub for interacting with language models, processing text, generating content, performing RAG-based retrieval tasks and integrating custom code through plugins and pipelines.

What distinguishes Open WebUI from many other interfaces is its fully offline capability, its enterprise-ready security model and its professional user interface designed for seamless everyday use across desktop and mobile.

Key Features of Open WebUI

Offline and Self-Hosted Operation

Open WebUI was built with privacy and autonomy as core principles. Users can operate completely offline without sending any data to external servers. This makes it a preferred platform for sensitive environments such as research labs, government agencies, educational institutions and private developers.

Powerful Model Support

The platform supports a wide range of AI model runners including:

- Ollama for local model execution

- OpenAI API and compatible third-party providers

- Local inference engines for RAG workflows

- Access to community and custom models

Users can load and switch between multiple models, which keeps a single interface usable across different AI tasks.
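To make the idea of "OpenAI-compatible" concrete, here is a minimal sketch of the request such an endpoint typically expects. It only builds the request and does not send it. The base URL, API key and model names are placeholders, and the `/v1/chat/completions` path is the conventional OpenAI-style route rather than something confirmed for any particular deployment, so check your provider's documentation.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str,
                       path: str = "/v1/chat/completions") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    The default path is an assumption based on the common OpenAI convention.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder values: switching models only changes the "model" field,
# while the shape of the request stays the same.
requests_by_model = {
    name: build_chat_request("http://localhost:11434", "sk-placeholder", name, "Hello")
    for name in ("llama3", "mistral")
}
```

Because every compatible backend accepts the same payload shape, a front end can swap models or providers by changing only the base URL and the model name.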

Integrated RAG and Document Processing

Retrieval Augmented Generation (RAG) allows models to reference documents, improving accuracy and context. Open WebUI includes:

- Document upload and library

- Inline document search using hashtags

- Local embeddings and indexing

- Web content import into chat

This makes it ideal for research, customer support bots, internal knowledge assistants and automation systems.
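The retrieval step behind RAG can be sketched in a few lines. The example below is not Open WebUI's implementation: it swaps a real embedding model for a toy word-count vector, and the document names and texts are invented for illustration. It shows the general flow of embedding a query, ranking documents by similarity and placing the best match into the prompt.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: dict[str, str], top_k: int = 1) -> list[str]:
    """Return the names of the top_k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda name: cosine(q, embed(documents[name])), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, documents: dict[str, str], top_k: int = 1) -> str:
    """Prepend the retrieved text to the question so the model can ground its answer."""
    context = "\n\n".join(documents[name] for name in retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical document library.
library = {
    "vacation-policy": "Employees accrue vacation days monthly and may carry over five days.",
    "expense-policy": "Expense reports must be submitted within thirty days with receipts.",
}
```

Calling `build_prompt("How many vacation days carry over?", library)` would place the vacation policy text ahead of the question. A production setup would replace `embed` with a proper embedding model and a vector index.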

Pipeline and Plugin Support

Open WebUI enables Python-based extensions where users can add custom logic, functions and integrations.

Popular use cases include:

- Function calling

- Usage analytics

- Language translation

- Content moderation and filtering

- Custom automation agents
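As a rough illustration of the content moderation use case, the sketch below shows a filter with one hook that runs before a request reaches the model and one that runs after the reply. The class name, the `inlet` and `outlet` method names and the message layout are assumptions made for this example; consult the Open WebUI documentation for the actual extension interface before relying on them.

```python
import re


class ModerationFilter:
    """Illustrative filter; the class name and blocked terms are made up for this sketch."""

    def __init__(self, blocked_terms=("password", "ssn")):
        pattern = "|".join(re.escape(term) for term in blocked_terms)
        # Match whole words only, ignoring case.
        self._pattern = re.compile(rf"\b(?:{pattern})\b", re.IGNORECASE)

    def _redact_role(self, body: dict, role: str) -> dict:
        """Replace blocked terms in every text message written by the given role."""
        for message in body.get("messages", []):
            content = message.get("content")
            if message.get("role") == role and isinstance(content, str):
                message["content"] = self._pattern.sub("[redacted]", content)
        return body

    def inlet(self, body: dict) -> dict:
        """Assumed pre-processing hook: clean user messages before the model sees them."""
        return self._redact_role(body, "user")

    def outlet(self, body: dict) -> dict:
        """Assumed post-processing hook: clean assistant messages before display."""
        return self._redact_role(body, "assistant")


request = {"messages": [{"role": "user", "content": "My password is hunter2"}]}
filtered = ModerationFilter().inlet(request)
print(filtered["messages"][0]["content"])  # prints: My [redacted] is hunter2
```

The same two-hook shape would also suit translation or usage analytics, since each of those transforms or inspects messages on the way in or out.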

Enterprise-Grade Features

The interface includes robust business-focused functionality:

- Role-Based Access Control (RBAC)

- SCIM 2.0 provisioning for Okta, Azure AD and Google Workspace

- Secure user groups and permissions

- Custom theming and branding for enterprise plans

Installation and Setup

Open WebUI offers multiple installation methods depending on your environment.

Install via Python pip

pip install open-webui

open-webui serve

After installation, access it in your browser at:

http://localhost:8080
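To confirm that the server is answering on that address, a small check along these lines may help. It is only a sketch: the function name is made up, and it assumes the default address shown above with no reverse proxy in front.

```python
import urllib.error
import urllib.request


def is_reachable(url: str = "http://localhost:8080", timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers at url, False if the connection fails."""
    # Bypass any system proxy settings, since the target is a local server.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a 2xx status.
        return True
    except OSError:
        # Covers URLError, refused connections and timeouts.
        return False
```

Running `is_reachable()` right after `open-webui serve` may return False for a short while, because the server can take some time to start.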

Install with Docker

For systems running Ollama locally:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

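For GPU-enabled environments, use the CUDA-tagged image instead: replace the :main tag with :cuda and add --gpus all to the command. With the 3000:8080 port mapping used here, the interface is available at http://localhost:3000.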

All-in-One Install with Ollama Included

CPU:

docker run -d -p 3000:8080 -v ollama:/root/.ollama -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:ollama

GPU:

docker run -d -p 3000:8080 --gpus=all -v ollama:/root/.ollama -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:ollama

Who Should Use Open WebUI?

Open WebUI is ideal for:

- Developers building AI tools locally

- Enterprises seeking secure AI deployments

- AI hobbyists experimenting with open models

- Researchers handling private datasets

- IT teams customizing in-house AI systems

- Educators creating local AI training environments

Whether you need a personal ChatGPT-like interface without cloud dependency or a fully scalable internal AI platform, Open WebUI provides the infrastructure.

Conclusion

Open WebUI has positioned itself as one of the leading platforms for local and self-hosted AI workflows. It delivers a polished experience while offering advanced enterprise capabilities and a strong open-source foundation. With support for multiple models, RAG, plugins, role-based access control and offline execution, it empowers users to build high-performance AI systems with complete data privacy and customization.

As artificial intelligence becomes more integrated into everyday productivity and enterprise workflows, tools like Open WebUI will play a key role in ensuring autonomy, transparency and innovation. Whether you are a developer, a research team, or an organization looking to deploy secure AI systems, Open WebUI offers a modern, feature-rich, and community-powered solution.
