As large language models (LLMs) continue to evolve, organizations face new challenges in optimizing performance, accuracy and cost across various AI workloads. Running multiple models efficiently, each specialized for specific tasks, has become essential for scalable AI deployment. Enter vLLM Semantic Router, an open-source project that adds a layer of semantic intelligence to the LLM inference pipeline.

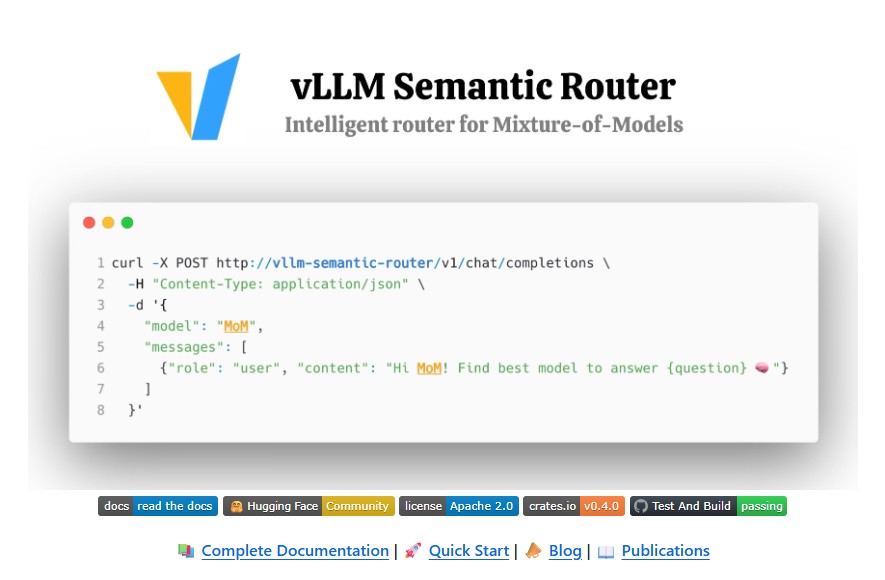

Developed by the vLLM Project, the Semantic Router acts as a Mixture-of-Models (MoM) router that intelligently directs incoming requests to the most suitable model or LoRA adapter based on the semantic understanding of the prompt. Rather than relying on static configurations, it uses AI-driven routing to improve inference speed, task relevance and overall performance.

This post explores what vLLM Semantic Router is, how it works and why it matters for enterprises and developers working with heterogeneous AI systems.

Understanding the Need for Intelligent Routing

Modern enterprises often deploy multiple language models for different tasks ranging from question answering and summarization to code generation and reasoning. However, not all prompts are the same. Some require deep logical reasoning while others involve simple retrieval or creative writing.

Many inference setups send every request to a single model, regardless of complexity or domain. This leads to inefficiencies in latency, cost and accuracy. For example, sending a simple classification query to a large GPT-style model wastes computational resources, while routing a complex reasoning task to a smaller model can produce poor results.

vLLM Semantic Router solves this bottleneck by acting as a semantic traffic controller, dynamically selecting the optimal model and configuration based on the intent and complexity of each request.

Core Innovations of the vLLM Semantic Router

1. Intelligent Routing Based on Semantic Understanding

At its core, the Semantic Router is designed to interpret the meaning and purpose of each prompt. It uses classification models trained on diverse datasets to categorize requests by intent, complexity and domain. Once a request is classified, the router forwards it to the most appropriate model or LoRA (Low-Rank Adaptation) adapter.

This is conceptually similar to a Mixture-of-Experts (MoE) architecture, but instead of routing within a single model, the Semantic Router operates across multiple models – each specialized for a different set of tasks. This ensures that complex queries are handled by high-capacity models, while simpler tasks are processed by lightweight models to reduce inference costs.
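The classify-then-route flow described above can be sketched as follows. This is a minimal illustration, not the project's implementation: a keyword count stands in for the trained classifiers, and the category names, keyword lists and model names (`large-reasoning-model`, `code-lora-adapter`, `small-general-model`) are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical keyword lists standing in for a trained intent classifier.
CATEGORY_KEYWORDS = {
    "math": ("integral", "derivative", "equation", "prove", "solve"),
    "code": ("python", "function", "bug", "compile", "refactor"),
}

# Hypothetical routing table: category -> model or LoRA adapter name.
ROUTES = {
    "math": "large-reasoning-model",
    "code": "code-lora-adapter",
    "general": "small-general-model",
}


@dataclass
class RoutingDecision:
    category: str
    model: str


def classify(prompt: str) -> str:
    """Pick the category with the most keyword hits; fall back to 'general'."""
    text = prompt.lower()
    scores = {cat: sum(word in text for word in words)
              for cat, words in CATEGORY_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "general"


def route(prompt: str) -> RoutingDecision:
    """Classify the prompt, then look up the model that serves that category."""
    category = classify(prompt)
    return RoutingDecision(category, ROUTES[category])


print(route("Solve the equation x^2 - 4 = 0"))
# → RoutingDecision(category='math', model='large-reasoning-model')
print(route("What is the capital of France?"))
# → RoutingDecision(category='general', model='small-general-model')
```

Keeping classification and the routing table separate means a better classifier can be swapped in without touching the mapping from categories to models.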

2. Domain-Aware System Prompts

The Semantic Router automatically injects domain-specific system prompts based on the task category. For instance, a mathematical query receives a “Math Expert” system prompt, while a coding query receives a “Programming Assistant” system prompt.

This reduces the need for manual prompt engineering and keeps model behavior consistent across domains such as coding, finance, healthcare and research.
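A minimal sketch of category-based system prompt injection is shown below. It assumes requests use the OpenAI-style chat format (a list of `role`/`content` messages); the prompt texts, the `SYSTEM_PROMPTS` table and the policy of leaving an existing system message untouched are hypothetical choices, since the post does not describe how the router handles those cases.

```python
import copy

# Hypothetical category -> system prompt table.
SYSTEM_PROMPTS = {
    "math": "You are a math expert. Work through problems step by step.",
    "code": "You are a programming assistant. Give correct, idiomatic code.",
}


def inject_system_prompt(messages: list, category: str) -> list:
    """Return a copy of an OpenAI-style message list with a domain system prompt.

    Assumed policy: unknown categories, and requests that already carry a
    system message, pass through unchanged.
    """
    result = copy.deepcopy(messages)  # never mutate the caller's request
    prompt = SYSTEM_PROMPTS.get(category)
    if prompt is None or any(m.get("role") == "system" for m in result):
        return result
    return [{"role": "system", "content": prompt}] + result


request = [{"role": "user", "content": "Integrate x^2 from 0 to 1."}]
for message in inject_system_prompt(request, "math"):
    print(message["role"], "->", message["content"])
```

Returning a copy keeps the original request intact, which helps when the same request is logged or cached before and after injection.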

3. Domain-Aware Semantic Caching

To further enhance performance, the Semantic Router implements domain-aware semantic caching. It stores embeddings of previous prompts alongside their responses; when a sufficiently similar request arrives, the system returns the cached response instead of recomputing it.

This caching can substantially reduce inference latency and computational cost, particularly in high-traffic enterprise settings where similar queries recur frequently.
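The lookup logic of a domain-aware similarity cache can be sketched as below. This is an illustration under stated assumptions, not the router's code: a bag-of-words count vector stands in for a neural embedding model, the 0.8 cosine-similarity threshold is an arbitrary example value, and entries are kept in a separate list per domain so that a hit can only come from the same domain.

```python
import math
from collections import Counter, defaultdict
from typing import Optional


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real cache would use a neural sentence encoder."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())  # missing keys count as 0
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold  # minimum similarity that counts as a hit
        # One entry list per domain, so a math answer is never served for a code query.
        self.entries = defaultdict(list)

    def get(self, domain: str, prompt: str) -> Optional[str]:
        """Return the most similar cached response in this domain, if similar enough."""
        query = embed(prompt)
        best_score, best_response = 0.0, None
        for vector, response in self.entries.get(domain, []):
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, domain: str, prompt: str, response: str) -> None:
        self.entries[domain].append((embed(prompt), response))


cache = SemanticCache(threshold=0.8)
cache.put("math", "What is the derivative of x squared", "2x")
print(cache.get("math", "what is the derivative of x squared please"))  # → 2x
print(cache.get("code", "what is the derivative of x squared please"))  # → None
```

The linear scan keeps the sketch short; a production cache would likely use a vector index and an eviction policy instead.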

4. Auto-Selection of Tools

The router also selects tools automatically, deciding which external tools or APIs to expose based on the query. For example, if a prompt requires translation, it activates a translation API; if it involves data retrieval, it uses an information extraction tool.

By filtering out irrelevant tools, the system avoids spending prompt tokens on unused tool definitions and improves tool-call accuracy, an essential factor in multi-tool LLM pipelines.
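The filtering idea can be sketched as scoring each tool description against the query and passing only the best matches to the model. This is not the project's implementation: the tool catalogue is hypothetical, and a simple keyword-overlap score stands in for whatever similarity measure a real selector uses.

```python
import re

# Hypothetical tool catalogue: tool name -> short description.
TOOLS = {
    "translate_text": "translate text from one language to another",
    "extract_information": "retrieve and extract information and data from documents",
    "get_weather": "get the current weather forecast for a city",
}

# Common words ignored when matching, so only content words count.
STOP_WORDS = {"a", "an", "and", "the", "to", "from", "for", "of", "in", "this", "me", "is", "what"}


def keywords(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower())) - STOP_WORDS


def select_tools(query: str, top_k: int = 2) -> list[str]:
    """Return up to top_k tool names that share keywords with the query, best first."""
    query_words = keywords(query)
    scored = [(len(query_words & keywords(desc)), name) for name, desc in TOOLS.items()]
    relevant = [(score, name) for score, name in scored if score > 0]
    relevant.sort(key=lambda pair: (-pair[0], pair[1]))  # highest overlap, then by name
    return [name for _, name in relevant[:top_k]]


print(select_tools("Translate this sentence into French"))  # → ['translate_text']
print(select_tools("Tell me a joke"))  # → []
```

When no tool matches, the request goes out with no tool definitions at all, which is where the token savings come from.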

5. Enterprise-Grade Security and Privacy

Security is a central pillar of the vLLM Semantic Router. It includes PII (Personally Identifiable Information) detection to keep sensitive data from reaching external APIs or third-party models. In addition, a Prompt Guard system detects and blocks jailbreak attempts and other malicious prompts, preventing models from generating unsafe or non-compliant outputs.

Enterprises can configure these safeguards globally or at a category level, ensuring fine-grained control and compliance with privacy regulations.
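How a global policy with per-category overrides might gate requests is sketched below. The detectors here are deliberately crude stand-ins (two regular expressions for PII and a short phrase list for jailbreaks) rather than the classifier models the router uses, and the `internal_hr` category and its override are hypothetical.

```python
import re
from dataclasses import dataclass

# Toy regex detectors; stand-ins for a trained PII classifier.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}
# Toy phrase list; stand-in for a jailbreak-detection model.
JAILBREAK_PHRASES = ("ignore all previous instructions", "pretend you have no rules")


@dataclass
class Policy:
    block_pii: bool = True
    block_jailbreak: bool = True


GLOBAL_POLICY = Policy()
# Hypothetical per-category override: an internal HR category may see PII.
CATEGORY_POLICIES = {"internal_hr": Policy(block_pii=False)}


def check_request(prompt: str, category: str) -> tuple[bool, list[str]]:
    """Return (allowed, reasons) for a prompt under its category's policy."""
    policy = CATEGORY_POLICIES.get(category, GLOBAL_POLICY)
    reasons = []
    if policy.block_pii:
        reasons += [f"PII:{label}" for label, pattern in PII_PATTERNS.items()
                    if pattern.search(prompt)]
    if policy.block_jailbreak and any(p in prompt.lower() for p in JAILBREAK_PHRASES):
        reasons.append("JAILBREAK")
    return (not reasons, reasons)


print(check_request("Email me at jane.doe@example.com", "general"))  # → (False, ['PII:EMAIL'])
print(check_request("Call 555-123-4567", "internal_hr"))  # → (True, [])
```

Categories without an override fall back to the global policy, so new categories are protected by default.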

Architecture and Implementation

The vLLM Semantic Router is implemented in Go (Golang), with a Rust FFI (Foreign Function Interface) layer built on the Candle ML framework for high-performance machine learning operations. A Python benchmarking layer is also available to evaluate routing performance and scalability.

The router works with Docker, Kubernetes and Hugging Face models, which simplifies deployment in both cloud and on-premises environments. Developers can get started with a single command:

```bash
bash ./scripts/quickstart.sh
```

This interactive setup installs all dependencies, downloads necessary models (~1.5GB), and launches the full stack of services, including a dashboard that provides real-time monitoring and analytics.

The vLLM Semantic Router Dashboard

A major highlight of the project is its interactive web dashboard, offering users visual insights into model routing behavior, cache usage and request statistics. Teams can analyze performance metrics, adjust routing rules and debug workflow bottlenecks directly from the dashboard.

Community and Open-Source Collaboration

The Semantic Router has a strong open-source community on GitHub, where its repository had over 2,000 stars and nearly 300 forks at the time of writing. Contributors from across the globe actively collaborate to improve routing algorithms, enhance integrations and optimize performance.

The team hosts bi-weekly community meetings via Zoom to engage contributors, discuss feature roadmaps and share research progress. Users can join the #semantic-router Slack channel to collaborate in real time.

The project is licensed under Apache 2.0, a permissive license that promotes transparency and open community contributions.

Conclusion

The vLLM Semantic Router represents the next phase in intelligent LLM inference, where requests are no longer handled identically but are routed to the model best suited for each task. Its combination of semantic understanding, domain-aware routing, caching and security makes it a powerful solution for organizations seeking to deploy heterogeneous AI systems efficiently.

With strong community support, continuous innovation, and seamless integration with open-source frameworks, the vLLM Semantic Router is paving the way for the future of scalable, intelligent AI infrastructure.
