As artificial intelligence advances, machines are becoming increasingly capable of understanding and responding to human language. Yet one crucial challenge remains: how can machines truly understand the context behind human intentions? This question forms the foundation of context engineering, a discipline that focuses on designing, organizing and managing contextual information so that AI systems can act in harmony with human goals.

The concept of context engineering, explored in “Context Engineering 2.0: The Context of Context Engineering” by researchers from SJTU and SII-GAIR, reveals how context shapes intelligent behavior. The paper traces its evolution from early human-computer interaction (HCI) systems to modern agent-driven systems built on large language models (LLMs). By redefining how machines perceive human context, context engineering aims to create more adaptive, collaborative and intelligent systems.

The Essence of Context Engineering

In simple terms, context engineering refers to the systematic process of collecting, managing and using contextual data to enhance a machine’s understanding of human intent. Context is not limited to words or data – it encompasses the situation, purpose and environment in which communication occurs.

Machines traditionally lacked the cognitive ability to “fill in the gaps” that humans naturally infer during interactions. Context engineering bridges this gap by translating human situations into structured, machine-readable representations. It’s essentially the art of converting high-entropy (complex) information into low-entropy (structured) knowledge that computers can understand.

This process goes beyond prompt design or memory retrieval – it’s about building systems capable of reasoning across situations, retaining relevant information and adjusting dynamically to human needs.

The Evolution of Context Engineering

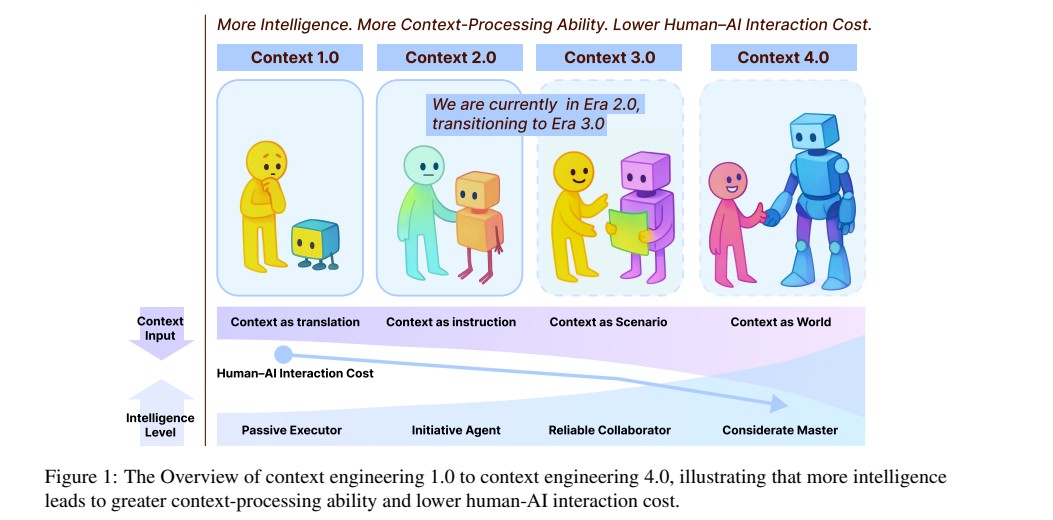

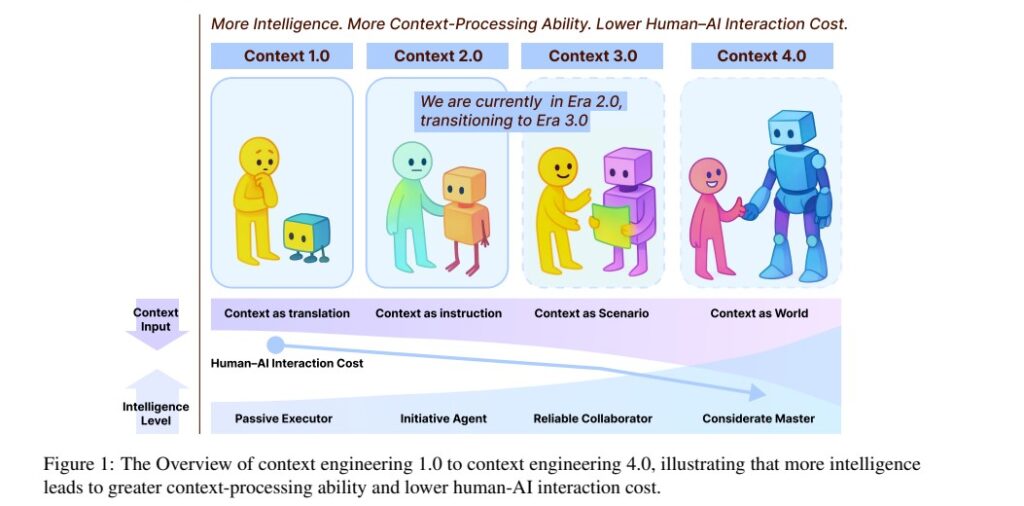

The development of context engineering can be divided into four eras, each corresponding to the growing intelligence of machines.

1. Context Engineering 1.0: Primitive Computation

The first era (1990s–2020) focused on structured, rule-based computing. Early systems relied on explicit, predefined inputs such as menu selections or simple sensor data. Frameworks like Anind Dey’s Context Toolkit (2001) formalized how context could be captured and used, setting the foundation for future advancements. However, machines could not yet interpret natural language or handle ambiguity – human designers still had to “translate” intentions into machine language.

2. Context Engineering 2.0: Agent-Centric Intelligence

The present era marks the transition from static systems to intelligent agents. With the introduction of large language models (LLMs) like GPT-3, machines can now understand natural language, infer meaning and collaborate interactively. Context is no longer limited to fixed commands – it now includes human emotions, workflows and ambiguous intent. Technologies such as Retrieval-Augmented Generation (RAG) and long-term memory agents exemplify this evolution, enabling systems to interpret raw, human-like data across text, voice and images.

3. Context Engineering 3.0: Human-Level Intelligence (Emerging)

In the near future, AI is expected to approach human-level reasoning, becoming capable of understanding social cues, emotions and situational subtleties. Machines would act as “reliable collaborators,” integrating multiple sensory contexts, such as auditory and emotional signals, into seamless interactions. This would mark a major step toward natural human–machine symbiosis.

4. Context Engineering 4.0: Superhuman Intelligence (Speculative)

The final stage envisions machines that go beyond human understanding. Instead of merely adapting to human context, they would construct new contexts, identifying hidden needs and generating innovative solutions. In this stage, AI systems would not just assist humans but also inspire new ways of thinking.

Core Pillars of Context Engineering 2.0

The current generation of context engineering is built upon three main pillars: collection, management and usage.

1. Context Collection

Modern systems collect multimodal data (text, images, audio, video and even biometric signals) from devices like smartphones, wearables and IoT systems. The guiding principles are:

- Minimal Sufficiency: Collect only what’s necessary for the task.

- Semantic Continuity: Preserve meaning, not just data.

This ensures efficiency and privacy while maintaining coherence across sessions and environments.
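To make minimal sufficiency concrete, here is a small sketch in Python. It is only an illustration under stated assumptions: the task names, the field names and the `REQUIRED_FIELDS` mapping are hypothetical and do not come from the paper. The idea is simply that a collector declares what each task needs and drops everything else before it is stored or sent to a model.

```python
from typing import Any

# Hypothetical mapping from a task to the context fields it actually needs.
# These names are invented for illustration only.
REQUIRED_FIELDS: dict[str, set[str]] = {
    "schedule_meeting": {"calendar", "timezone"},
    "fitness_summary": {"heart_rate", "step_count"},
}


def collect_context(task: str, available: dict[str, Any]) -> dict[str, Any]:
    """Keep only the signals the task needs (minimal sufficiency)."""
    needed = REQUIRED_FIELDS.get(task, set())
    return {name: value for name, value in available.items() if name in needed}


# Everything the device could report, including data this task has no use for.
signals = {
    "calendar": ["09:00 standup"],
    "timezone": "UTC+8",
    "heart_rate": 72,
    "location": (31.2, 121.5),
}

meeting_context = collect_context("schedule_meeting", signals)
```

In this sketch an unknown task collects nothing at all, which is a deliberately conservative default: heart rate and location never leave the device unless a task explicitly asks for them.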

2. Context Management

Once collected, context must be structured and prioritized. Context management involves:

- Hierarchical memory organization (short-term vs. long-term)

- Context abstraction (summarizing or vectorizing information)

- Functional isolation (using specialized “subagents” for tasks)

For example, systems like Claude Code isolate functional contexts to avoid confusion, while others like Letta use shared memory to support multi-agent collaboration. These techniques mimic the human brain’s ability to store, recall, and adapt information dynamically.
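The split between short-term and long-term memory, together with context abstraction, can be sketched in a few lines of Python. This is a toy model, not how Claude Code, Letta or any other system implements memory: the `HierarchicalMemory` class is hypothetical, and the `_summarize` step uses plain truncation as a stand-in for a real summarizer or embedding model.

```python
from collections import deque


class HierarchicalMemory:
    """Toy two-tier memory: a bounded short-term buffer plus a long-term store."""

    def __init__(self, short_term_size: int = 3, summary_chars: int = 40) -> None:
        self.short_term: deque[str] = deque()
        self.long_term: list[str] = []
        self.short_term_size = short_term_size
        self.summary_chars = summary_chars

    def _summarize(self, text: str) -> str:
        # Placeholder abstraction step: truncation stands in for a real
        # summarizer or vectorizer (an assumption made for illustration).
        return text[: self.summary_chars]

    def add(self, text: str) -> None:
        """Store a new item; move the oldest items to long-term memory on overflow."""
        self.short_term.append(text)
        while len(self.short_term) > self.short_term_size:
            oldest = self.short_term.popleft()
            self.long_term.append(self._summarize(oldest))


memory = HierarchicalMemory(short_term_size=2, summary_chars=5)
for note in ["alpha one", "beta two", "gamma three"]:
    memory.add(note)
```

Recent items stay verbatim in the short-term buffer, while older ones survive only in compressed form. That trade-off between fidelity and capacity is the core of the hierarchical approach described above.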

3. Context Usage

Context engineering enables machines not only to remember but also to reason and infer. This includes:

- Selecting relevant memory for each task

- Sharing information across systems

- Inferring user needs proactively

When context is used effectively, AI becomes a true collaborator, anticipating user goals rather than waiting for explicit instructions.
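Selecting relevant memory for a task can be illustrated with a simple ranking function. The sketch below is an assumption-laden toy: it scores stored memories by word overlap with the query (Jaccard similarity), whereas production systems would more likely use embeddings or a retrieval pipeline. The function names `tokenize` and `select_relevant` are hypothetical.

```python
def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(text.lower().split())


def select_relevant(query: str, memories: list[str], k: int = 2) -> list[str]:
    """Return up to k memories that share the most vocabulary with the query."""
    query_tokens = tokenize(query)

    def score(memory: str) -> float:
        # Jaccard similarity: shared words divided by all distinct words.
        memory_tokens = tokenize(memory)
        union = query_tokens | memory_tokens
        return len(query_tokens & memory_tokens) / len(union) if union else 0.0

    ranked = sorted(memories, key=score, reverse=True)
    # Drop memories with no overlap at all so irrelevant context is never passed on.
    return [memory for memory in ranked[:k] if score(memory) > 0]


memories = [
    "user prefers morning meetings",
    "user is allergic to peanuts",
    "project deadline is friday",
]

top = select_relevant("when is the project deadline", memories, k=1)
```

Only the selected memories would be placed into the model's context for the task, which keeps the input small and focused.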

Challenges and Future Directions

As context engineering matures, new challenges emerge. The most pressing include:

- Storage scalability: Efficiently preserving lifelong contextual data without overload.

- Processing efficiency: Handling vast information while maintaining accuracy and low latency.

- System stability: Preventing reasoning breakdowns caused by large, complex context inputs.

To address these, researchers are exploring hierarchical memory systems, lifelong context learning and context selection algorithms that mimic human attention and memory consolidation. The ultimate goal is to make AI systems capable of lifelong contextual understanding, continuously learning and adapting as human needs evolve.

Conclusion

Context engineering represents the next great leap in artificial intelligence. From early rule-based systems to today’s intelligent agents, the discipline has evolved into a holistic framework for understanding human intent. As we move toward Context Engineering 3.0 and beyond, the boundary between human and machine cognition will continue to blur. Machines will not just react – they will understand, collaborate and co-create with us.

By embracing context engineering, developers and organizations can build AI systems that are not only smarter but also more empathetic, reliable and human-centered. The future of AI depends not only on intelligence but on context.
