Large Language Models (LLMs) such as GPT, Claude and Gemini have dramatically transformed artificial intelligence. From generating natural text to assisting in code and research, these models rely on one fundamental process: autoregressive generation predicting text one token at a time. However, this sequential nature poses a critical efficiency bottleneck. Generating text token by token limits both training speed and inference efficiency making it challenging to scale LLMs to larger contexts or real-time applications.

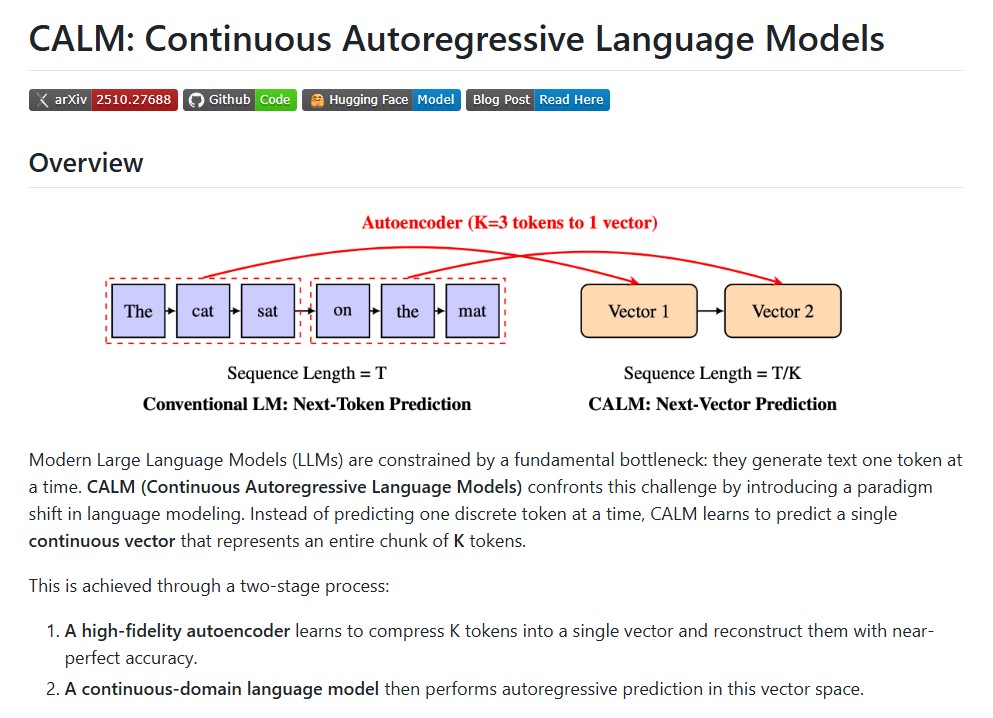

To overcome this challenge, researchers introduced CALM (Continuous Autoregressive Language Models), a revolutionary framework that redefines how language models generate text. Instead of predicting one discrete token at a time, CALM predicts a continuous vector representing an entire chunk of tokens drastically reducing the number of autoregressive steps required.

Developed by Shaocheng Ze and collaborators, CALM proposes a two-stage architecture that combines autoencoding, continuous representation learning and energy-based modeling. This approach not only improves efficiency but also opens new possibilities in scaling, evaluation and control of LLMs.

The Core Idea: From Discrete Tokens to Continuous Chunks

Traditional language models operate in the discrete token space – each prediction is a single token (word, subword or character). CALM introduces a fundamental shift by operating in a continuous latent space where each vector corresponds to a chunk of K tokens.

This design brings two major benefits:

- Efficiency: Reduces the number of autoregressive prediction steps by a factor of K.

- Information Density: Allows the model to reason about larger semantic units at once instead of focusing on token-level syntax.

By modeling language continuously, CALM can process meaning more holistically generating text that is not only faster but also semantically richer.

The Two-Stage Process of CALM

The CALM framework is built upon a two-stage training process that ensures high-fidelity representation and efficient generative modeling.

Stage 1: Training the Autoencoder

In the first stage, a high-fidelity autoencoder is trained on billions of tokens to learn how to compress a sequence of K tokens into a single latent vector and reconstruct them with near-perfect accuracy.

This component acts as the “translator” between discrete language and continuous representation. It ensures that no information is lost during compression allowing the subsequent language model to operate effectively in the latent space.

Stage 2: Training the Continuous Language Model

Once the autoencoder is trained, it trains a continuous-domain language model that predicts the next latent vector based on previous ones. Instead of working with token probabilities, it uses energy-based training, a powerful likelihood-free method that optimizes the model based on the energy landscape of the latent representations.

During evaluation, the model’s performance is measured using a new metric called BrierLM, designed specifically for calibrated and likelihood-free evaluation in continuous language modeling. CALM models consistently achieve superior BrierLM scores compared to traditional autoregressive Transformers.

Key Features and Innovations

1. Ultra-Efficient by Design

By predicting one vector for multiple tokens, CALM reduces both training and inference costs dramatically. Fewer autoregressive steps mean faster generation without sacrificing accuracy or fluency.

2. Semantic Bandwidth (K): A New Scaling Axis

It introduces the idea of semantic bandwidth, defined by the chunk size K. Instead of scaling only by model size or dataset size, CALM allows researchers to scale the amount of information processed per step. This offers a new dimension in AI model scalability.

3. Likelihood-Free Generative Framework

Operating in a continuous domain requires new mathematical tools. CALM uses energy-based modeling – a likelihood-free generative approach to evaluate and train models based on relative energy differences rather than fixed probability distributions. This allows more flexible, stable and theoretically grounded optimization.

4. The BrierLM Metric

To evaluate CALM’s output quality, researchers developed BrierLM, a new metric for assessing model calibration and performance in a likelihood-free setting. It provides a more nuanced understanding of model accuracy than traditional log-likelihood metrics.

5. Temperature Sampling for Controlled Generation

CALM integrates temperature sampling, which adjusts the “creativity” of the model’s output without altering its architecture. This feature enables controlled text generation crucial for balancing accuracy and diversity in AI systems.

Performance and Benchmarks

In extensive experiments, CALM demonstrated impressive efficiency and performance gains. For example:

| Model | Parameters | BrierLM Score |

| Autoencoder | 75M | — |

| CALM-M | 371M | 5.72 |

| CALM-L | 735M | 6.58 |

| CALM-XL | 1.82B | 8.53 |

The results show that CALM not only outperforms traditional autoregressive models in BrierLM performance but also achieves this with far fewer autoregressive steps. This efficiency makes CALM ideal for large-scale text generation, translation and real-time applications.

Comparison with Traditional Transformers

Traditional autoregressive Transformers predict one token at a time, leading to:

- High computational cost

- Long latency for long-form text generation

- Redundant step-by-step dependencies

In contrast, CALM’s chunk-based continuous modeling significantly reduces redundancy allowing faster text generation without losing coherence. It also provides a flexible foundation for integrating advanced architectures like diffusion and flow matching models which further improve performance in generative AI tasks.

Practical Implementation

To reproduce CALM’s results, researchers recommend a large-scale setup using approximately 15 billion tokens for autoencoder training. The implementation process includes:

- Cloning the repository and installing dependencies.

- Training the autoencoder using the provided shell script (train_autoencoder.sh).

- Training CALM with the energy-based loss function using train_energy.sh.

- Evaluating the model with eval_energy.sh to compute BrierLM scores.

For comparison, baseline autoregressive Transformers can also be trained with the same data to highlight CALM’s efficiency advantage.

Future Implications

CALM introduces a paradigm shift in how language models are trained, scaled, and evaluated. By compressing discrete text into continuous representations, it opens new directions for:

- Scalable LLMs that process entire paragraphs per step.

- Cross-modal AI that can integrate visual and textual information seamlessly.

- Energy-efficient AI systems suitable for real-time applications on limited hardware.

As researchers continue to refine continuous autoregressive modeling, CALM lays the foundation for faster, smarter and more adaptive large language models that redefine the future of natural language generation.

Conclusion

CALM (Continuous Autoregressive Language Models) represents a breakthrough in language modeling efficiency. By combining high-fidelity autoencoding, energy-based training and continuous representation learning, it enables LLMs to generate coherent text far more efficiently than traditional token-level models.

Through its innovative concepts like semantic bandwidth and the BrierLM metric, CALM establishes a new frontier for scalable and high-performance AI systems. It not only optimizes the way models are trained but also redefines how machines understand and generate human language at scale.

With CALM, the path toward real-time, high-bandwidth and context-aware AI is not a distant vision – it is already becoming a reality.

Follow us for cutting-edge updates in AI & explore the world of LLMs, deep learning, NLP and AI agents with us.

Related Reads

- Supervised Reinforcement Learning: A New Era of Step-Wise Reasoning in AI

- Context Engineering 2.0: Redefining Human–Machine Understanding

- OpenAI Evals: The Framework Transforming LLM Evaluation and Benchmarking

- Skyvern: The Future of Browser Automation Powered by AI and Computer Vision

- Steel Browser: The Open-Source Browser API Powering AI Agents and Automation

2 thoughts on “CALM: Revolutionizing Large Language Models with Continuous Autoregressive Learning”