As artificial intelligence continues to move beyond large, resource-heavy models, the demand for lightweight, efficient, and responsibly developed language models has grown rapidly. Developers today need AI systems that can run on laptops, small servers, or private cloud infrastructure without sacrificing performance, safety, or flexibility. Addressing this demand, Google DeepMind introduced Gemma 3, a new generation of open models built on the same research foundations as Gemini.

Among these models, Gemma-3-1B-IT stands out as a compact yet capable instruction-tuned language model designed for real-world text generation tasks. With open weights, multilingual support, strong safety evaluations, and a modern architecture, Gemma-3-1B-IT brings advanced AI capabilities to environments with limited computational resources.

What Is Gemma-3-1B-IT?

Gemma-3-1B-IT is an instruction-tuned variant of the Gemma 3 family, developed by Google DeepMind. The “IT” suffix refers to instruction tuning, meaning the model is optimized to follow user prompts in a conversational and helpful manner.

With 1 billion parameters, this model is designed to balance performance and efficiency. It takes text input and generates text output, making it suitable for chatbots, content generation, summarization, and educational tools. Unlike proprietary systems, Gemma-3-1B-IT offers open access to its model weights, subject to Google’s Gemma Terms of Use.

Key Features of Gemma-3-1B-IT

Lightweight and Efficient Design

Gemma-3-1B-IT is optimized for environments where compute and memory are constrained. Its small size allows it to run on consumer-grade GPUs, desktops, and even laptops with quantization, democratizing access to modern AI capabilities.
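To make the effect of quantization concrete, here is a rough back-of-the-envelope sketch of weight memory at different precisions. It assumes exactly 1 billion parameters (the real count differs slightly) and counts weights only, ignoring activations, the KV cache, and framework overhead, so real usage will be higher.

```python
# Approximate bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "float32": 4.0,
    "bfloat16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Return the approximate weight-only memory footprint in gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Assumption: a round 1B parameters, used purely for illustration.
NUM_PARAMS = 1_000_000_000

for dtype in BYTES_PER_PARAM:
    print(f"{dtype:>8}: ~{weight_memory_gb(NUM_PARAMS, dtype):.1f} GB")
```

Under these assumptions, the weights shrink from roughly 4 GB at 32-bit precision to about 0.5 GB at 4-bit, which is why quantized builds fit comfortably on laptops.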

Large Context Window for Its Class

The 1B model supports a 32,000-token input context, which is substantial for a lightweight model. This enables it to handle longer conversations, documents, and prompts more effectively than many models of similar size.
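Long conversations can still outgrow the window, so applications usually trim older turns. The sketch below shows one simple way to do that. The helper names (`trim_history`, `whitespace_count`) and the reserved output budget are illustrative assumptions, and the whitespace counter is only a stand-in; a real application would count tokens with the model's own tokenizer.

```python
from typing import Callable, List

# Input context size for the 1B model as stated above.
CONTEXT_WINDOW = 32_000


def trim_history(
    turns: List[str],
    count_tokens: Callable[[str], int],
    reserved_for_output: int = 1024,  # assumption: room left for the reply
    context_window: int = CONTEXT_WINDOW,
) -> List[str]:
    """Keep the most recent turns whose combined token count fits the budget."""
    budget = context_window - reserved_for_output
    kept: List[str] = []
    used = 0
    # Walk from newest to oldest and stop at the first turn that does not fit.
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


def whitespace_count(text: str) -> int:
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())
```

Dropping the oldest turns first keeps the most recent context intact; summarizing older turns instead is another option when earlier details matter.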

Multilingual Capabilities

Gemma 3 models are trained on data covering more than 140 languages, allowing Gemma-3-1B-IT to generate and understand text across a wide range of global languages. This makes it useful for international applications, language learning tools, and multilingual content platforms.

Instruction-Tuned Behavior

As an instruction-tuned model, Gemma-3-1B-IT is well-suited for interactive use cases. It responds clearly to prompts, follows structured instructions, and produces user-aligned outputs for tasks such as explanations, summaries, and creative writing.
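Instruction-tuned Gemma models expect conversations to be wrapped in turn markers. The sketch below illustrates that format using the `<start_of_turn>` and `<end_of_turn>` control tokens with the `user` and `model` roles. The function name `format_gemma_prompt` is hypothetical, and the sketch omits details such as the beginning-of-sequence token; in practice the tokenizer's built-in chat template should do this work.

```python
from typing import List, Tuple


def format_gemma_prompt(turns: List[Tuple[str, str]]) -> str:
    """Render (role, text) pairs in Gemma's turn format and open a model turn.

    Illustrative only: prefer the tokenizer's chat template in real code.
    """
    parts = []
    for role, text in turns:
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<start_of_turn>{role}\n{text}<end_of_turn>\n")
    # Leave an open model turn so generation continues as the assistant.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


print(format_gemma_prompt([("user", "Summarize photosynthesis in one sentence.")]))
```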

Training Data and Methodology

Gemma-3-1B-IT was trained on approximately 2 trillion tokens, drawn from a diverse mix of high-quality data sources. These include:

- Web documents across multiple domains

- Programming code

- Mathematical and scientific text

- Multilingual content

- Image data, which applies to the larger multimodal Gemma 3 models (the 1B variant itself accepts text only)

To ensure responsible AI development, Google applied rigorous data filtering, including child safety filtering, sensitive data removal, and content quality checks. These steps help reduce harmful outputs and improve reliability.

Architecture and Implementation

The model is based on a modern transformer architecture and was trained using JAX and ML Pathways, Google’s large-scale AI training infrastructure. According to the model card, training ran on TPU hardware (TPUv4p, TPUv5p, and TPUv5e), which enables efficient large-scale optimization across the Gemma 3 family, including its larger multimodal models.

Gemma-3-1B-IT is compatible with Hugging Face Transformers (version 4.50.0 and above), allowing developers to easily integrate it into existing workflows using standard APIs.
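As a minimal sketch of that integration, the snippet below uses the Transformers `pipeline` API with chat-style messages. It assumes the Hugging Face model ID `google/gemma-3-1b-it`, that `transformers` 4.50.0 or later and a backend such as PyTorch are installed, and that the model's license has been accepted on the Hub. The helper names `build_messages` and `generate` are illustrative, not part of any library.

```python
from typing import Dict, List


def build_messages(user_prompt: str) -> List[Dict[str, str]]:
    """Wrap a prompt in the chat-message structure the pipeline accepts."""
    return [{"role": "user", "content": user_prompt}]


def generate(
    user_prompt: str,
    model_id: str = "google/gemma-3-1b-it",  # assumed Hub ID; access is gated
    max_new_tokens: int = 128,
) -> str:
    """Generate a reply with the text-generation pipeline."""
    # Imported lazily so the helper above works without transformers installed.
    from transformers import pipeline

    pipe = pipeline("text-generation", model=model_id)
    outputs = pipe(build_messages(user_prompt), max_new_tokens=max_new_tokens)
    # With chat input, generated_text holds the whole conversation;
    # the last message is the model's reply.
    return outputs[0]["generated_text"][-1]["content"]
```

Calling `generate("Explain what instruction tuning means in two sentences.")` downloads the weights on first use and returns the reply as a string. Creating the pipeline once and reusing it is preferable in a real service, since loading the model dominates startup time.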

Performance and Evaluation

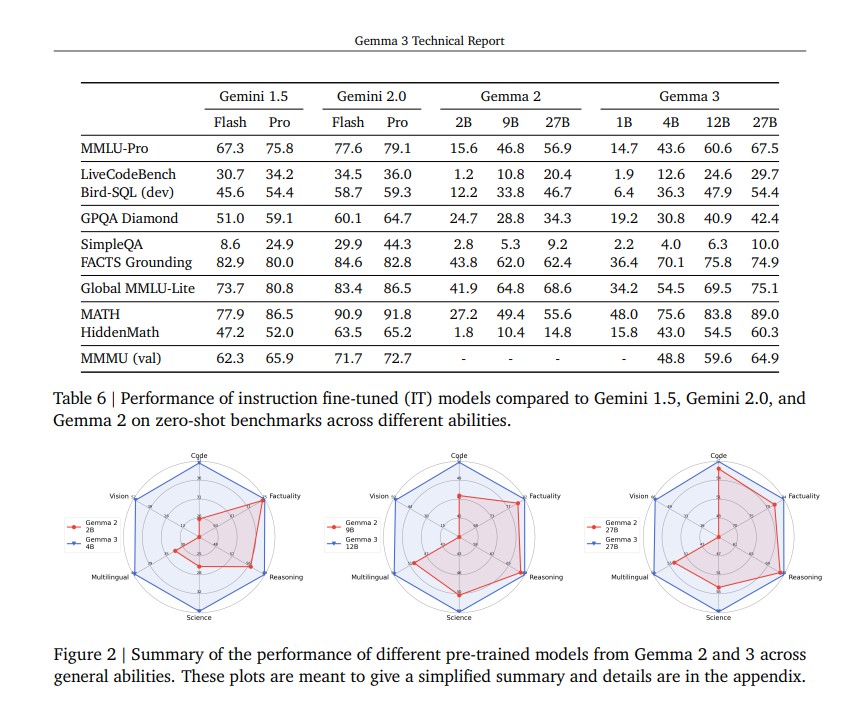

Gemma 3 models were evaluated across a wide range of benchmarks covering:

- Reasoning and factual knowledge

- STEM and code understanding

- Multilingual language tasks

- Ethical and safety-related behavior

Although the 1B variant is smaller than its 4B, 12B, and 27B counterparts, it demonstrates competitive performance for its size, especially in general reasoning, basic coding understanding, and multilingual generation.

In safety evaluations, Gemma-3-1B-IT showed significant improvements over previous Gemma versions, producing minimal policy violations even when tested without safety filters. This highlights Google’s emphasis on responsible AI development.

Practical Use Cases

Gemma-3-1B-IT is suitable for a wide range of applications, including:

- Chatbots and conversational assistants

- Content generation and drafting

- Text summarization

- Educational and tutoring tools

- Language learning applications

- Research and experimentation in NLP

- Lightweight enterprise AI systems

Its compact size makes it especially useful for on-device AI, private deployments, and startups that cannot rely on large proprietary models.

Limitations

Despite its strengths, Gemma-3-1B-IT has limitations common to all language models:

- It may produce incorrect or outdated factual information

- It can struggle with highly complex or ambiguous tasks

- It relies on patterns learned during training rather than real-time knowledge

- Subtle nuance, sarcasm, or advanced reasoning may be challenging

Developers should treat outputs as assistive rather than authoritative and apply human oversight in critical applications.

Conclusion

Gemma-3-1B-IT represents a strong step forward in accessible, responsible, and efficient open-weight AI. By combining instruction tuning, multilingual support, safety-focused training, and lightweight deployment capabilities, Google DeepMind has created a model that fits modern development needs without excessive resource demands.

For developers, researchers, and organizations seeking a compact yet capable language model backed by robust evaluation and responsible design, Gemma-3-1B-IT is a practical and forward-looking choice in the evolving AI ecosystem.
