Artificial Intelligence has reached an inflection point. From research labs to enterprise applications, transformer architectures and their successors have become the driving force of innovation. To truly understand this evolution and where it’s headed, few voices are as authoritative as Antonio Gulli, who has now released a landmark book that spans the theory, practice and future directions of modern AI.

This six-part book is not only a comprehensive guide to transformers and large language models (LLMs) but also a vision of what lies ahead in reasoning, scaling and alignment. It blends technical rigor with practical insights, making it equally valuable for researchers, engineers and AI enthusiasts. Even more inspiring, all royalties from the book are being donated to Save the Children, aligning cutting-edge AI knowledge with a global humanitarian cause.

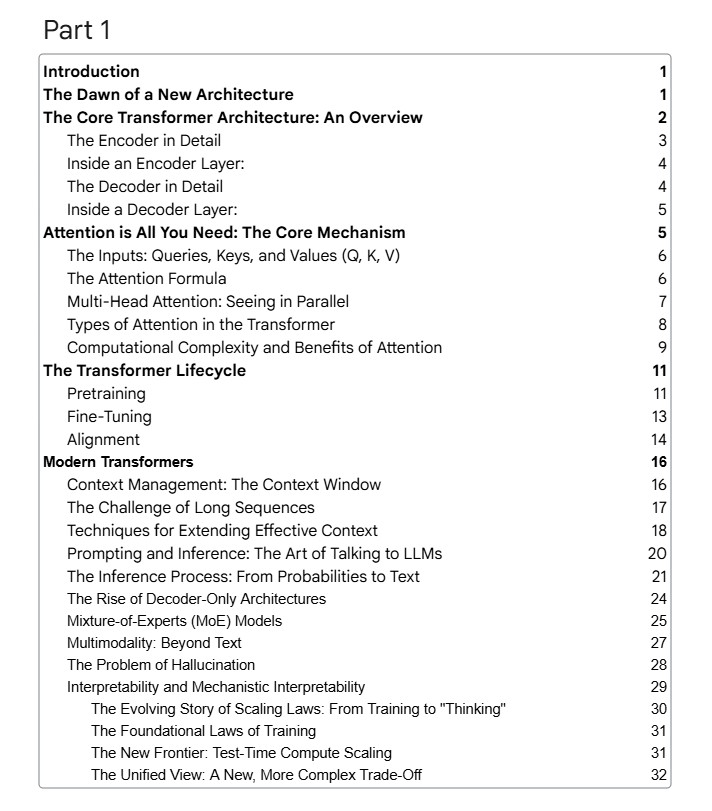


The Architecture of the Book

The book is divided into six distinct parts, each focusing on a critical layer of AI evolution. Together, they build a narrative that takes the reader from the dawn of the transformer to the advanced reasoning systems and frontier models of 2025.

Part 1: The Dawn of a New Architecture

This opening section takes the reader through the origins of the transformer architecture – the foundation of today’s LLMs. It begins with the encoder-decoder framework, explores the mechanics of attention (queries, keys and values), and explains the scaling benefits that propelled transformers to dominance. Key topics include:

- Multi-head attention and its role in parallel processing

- Computational complexity and the advantages of attention

- Pretraining, fine-tuning, and alignment workflows

- The rise of decoder-only architectures and Mixture-of-Experts (MoE)

- Challenges such as hallucinations and interpretability

By the end of Part 1, readers gain a complete picture of how transformers work and why they revolutionized AI.
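To make the query, key and value mechanics concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration written for this article, not code taken from the book; the function names `softmax` and `attention` are assumptions, and real implementations operate on batched tensors with multiple heads.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors (single head)."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs
```

Multi-head attention runs several such computations in parallel on different learned projections of the input and concatenates the results.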

Part 2: Reasoning and Alignment in AI

The second part dives into the reasoning abilities of AI systems and how alignment is achieved. It explores how inference-time scaling allows models to “think deeper” on demand, introducing strategies like Chain of Thought (CoT), Few-Shot prompting and Tree of Thoughts (ToT).
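As a rough illustration of how few-shot prompting and Chain of Thought combine, the sketch below assembles a prompt from worked examples. The helper name `build_cot_prompt`, the dictionary keys and the exact wording of the cue are assumptions made for this example, not a format prescribed by the book.

```python
def build_cot_prompt(examples, question):
    """Assemble a few-shot Chain of Thought prompt from worked examples."""
    parts = []
    for ex in examples:
        # Each demonstration shows the reasoning before the final answer.
        parts.append(
            f"Q: {ex['question']}\n"
            f"A: Let's think step by step. {ex['reasoning']} "
            f"The answer is {ex['answer']}."
        )
    # The new question ends with the same cue so the model continues reasoning.
    parts.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(parts)


demo = [{
    "question": "Tom has 3 apples and buys 2 more. How many does he have?",
    "reasoning": "He starts with 3 and adds 2, so 3 + 2 = 5.",
    "answer": "5",
}]
print(build_cot_prompt(demo, "A train has 4 cars with 10 seats each. How many seats?"))
```

The resulting string would be sent to a language model, which is expected to produce intermediate reasoning before its answer.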

It also provides an in-depth treatment of alignment techniques, including:

- Reinforcement Learning from Human Feedback (RLHF)

- Reinforcement Learning from AI Feedback (RLAIF)

- Pure reinforcement learning and algorithms like PPO and DPO

- Supervised fine-tuning (SFT) and distillation methods

The section concludes with a comparative analysis of leading models in 2025, weighing proprietary titans against the open-source insurgency and introducing readers to benchmarks of reasoning capability.

Part 3: Hands-On Transformer Implementation

Theory is nothing without practice, and Part 3 provides a code-driven exploration of transformer models. From initialization and hyperparameters to multi-headed attention, transformer blocks and training logic, readers are guided through the end-to-end process of building an attention-based language model.

This section also includes practical components such as evaluation logic, data preprocessing and generation helpers. For practitioners, this is the part of the book that turns abstract architecture into working code.
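A generation helper of the kind mentioned above can be sketched in a few lines. The version below is an assumption about what such a helper might look like, not the book's code: `sample_next_token`, `generate` and the stand-in `toy_model` are hypothetical names, and the "model" is just a function that maps token ids to logits.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, rng=random):
    """Pick a token id from raw logits; temperature 0 means greedy argmax."""
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    # Unnormalized softmax weights; random.choices normalizes them.
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def generate(model_fn, prompt_ids, max_new_tokens, temperature=1.0, eos_id=None):
    """Autoregressive loop: feed the tokens so far, append one new token."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        next_id = sample_next_token(model_fn(ids), temperature)
        ids.append(next_id)
        if next_id == eos_id:
            break
    return ids


def toy_model(ids, vocab_size=4):
    """Hypothetical stand-in for a trained model: favors (last token + 1)."""
    logits = [0.0] * vocab_size
    logits[(ids[-1] + 1) % vocab_size] = 5.0
    return logits


print(generate(toy_model, [0], max_new_tokens=3, temperature=0))  # [0, 1, 2, 3]
```

Lower temperatures concentrate probability on the highest-scoring tokens, while higher temperatures make the output more varied.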

Part 4: Modern Refinements in Architecture

The fourth part addresses architectural refinements that power next-generation models. Some highlights include:

- Mixture-of-Experts (MoE) for scaling efficiency

- Grouped-Query Attention (GQA) for reduced memory use and computation during inference

- Rotary Position Embeddings (RoPE) to improve positional representation

- RMSNorm and SwiGLU as alternatives to traditional normalization and activation functions

- KV Caching for faster inference in large-scale deployments

Accompanied by detailed code breakdowns, this section bridges advanced concepts with real-world implementation.
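Two of the refinements listed above are compact enough to sketch directly. The plain-Python functions below illustrate RMSNorm and Rotary Position Embeddings under simplifying assumptions: single vectors instead of batched tensors, and the interleaved-pair RoPE convention with a base of 10000 (some implementations split the vector into halves instead). The names `rms_norm` and `rope` are chosen for this example and are not the book's code.

```python
import math


def rms_norm(x, gain, eps=1e-6):
    """RMSNorm: rescale x by its root mean square, then apply a learned gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [g * v / rms for v, g in zip(x, gain)]


def rope(x, position, base=10000.0):
    """Rotate consecutive pairs of x by angles that grow with position."""
    d = len(x)  # must be even
    out = []
    for i in range(0, d, 2):
        # Lower-index pairs rotate faster; higher-index pairs rotate slower.
        angle = position * base ** (-i / d)
        cos_a, sin_a = math.cos(angle), math.sin(angle)
        out.append(x[i] * cos_a - x[i + 1] * sin_a)
        out.append(x[i] * sin_a + x[i + 1] * cos_a)
    return out
```

Unlike LayerNorm, RMSNorm skips mean subtraction and the bias term. Because RoPE applies rotations, the dot product between a rotated query and a rotated key depends only on the difference between their positions, which is what makes it a relative positional scheme.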

Part 5: Flagship Architectures of 2025

Part 5 reads almost like a state-of-the-art survey, spotlighting the flagship models defining 2025. These include:

- DeepSeek-V3

- OLMo 2

- Gemma 3

- Llama 4

- Qwen3

- SmolLM3

- Kimi K2 from China

- GPT-OSS, OpenAI’s open-source initiative

This section doesn’t just introduce these models; it dissects their unique innovations, from sparse MoEs to latent attention and sliding windows, helping readers see where the industry is heading.

Part 6: The Gemma 3 Case Study

The final part provides a deep technical dive into Gemma 3, a flagship model of the era. With full coverage of its configuration files, tokenizers, parallelization strategies and model definitions, this section demonstrates how state-of-the-art architectures are engineered for real-world use.

Read the Complete Guide here

https://docs.google.com/document/d/1WUk_A3LDvRJ8ZNvRG–vhI287nDMR-VNM4YOV8mctbI/preview?tab=t.u5rxkidg8s5c

Why This Guide Matters

Antonio Gulli’s book is more than a technical manual—it’s a chronicle of AI’s transformation. It connects the dots between academic breakthroughs, industrial deployments, and future possibilities, making it a valuable resource for:

- Researchers seeking a structured overview of the field

- Engineers and data scientists looking for practical implementation guides

- Tech leaders wanting clarity on scaling, reasoning, and alignment

- Students and learners eager to understand the foundations of modern AI

Crucially, by donating all royalties to Save the Children, the book embodies a commitment to ensure that AI serves not just technological progress but also human progress.

Final Thoughts

We are living in the age of transformers, where architectures evolve rapidly, reasoning frameworks emerge, and industry models compete to set new benchmarks. Antonio Gulli’s guide captures this dynamic moment with both depth and accessibility.

Whether you want to grasp attention mechanisms, explore alignment techniques, or learn from code-level implementations of transformers, this book provides the tools and insights you need. And as AI moves closer to artificial general intelligence, resources like this will be essential for navigating both the opportunities and the challenges ahead.

This release is a must-read for anyone serious about understanding the transformer architecture, reasoning, and the future of AI.

