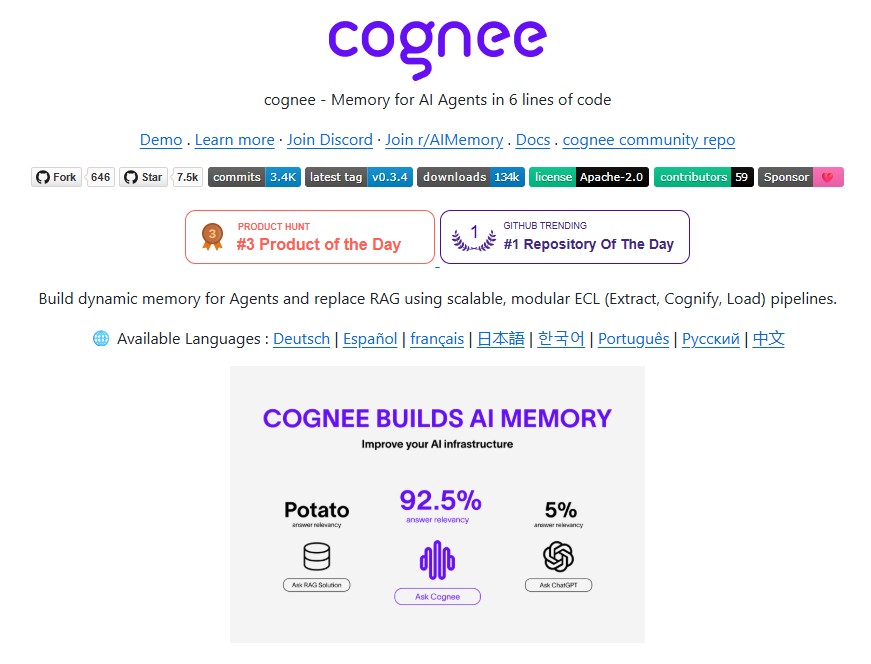

Artificial Intelligence is evolving rapidly, but one of the biggest challenges for developers is building agents that remember, reason and adapt. Traditional RAG (Retrieval-Augmented Generation) systems often fall short when handling context, scalability and precision. That’s where Cognee comes in.

Cognee is an open-source framework that gives AI agents memory through an ECL (Extract, Cognify, Load) pipeline. With just a few lines of code, developers can turn conversations, files and multimedia into a memory layer backed by a knowledge graph and vector embeddings.
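The Extract, Cognify, Load stages can be made concrete with the toy, pure-Python sketch below. It is not Cognee's implementation. The regex in `cognify` is an assumption that stands in for the LLM-based entity and relation extraction a real memory layer performs, and `extract`, `cognify`, `load` and `RELATION_PATTERN` are hypothetical names.

```python
import re
from collections import defaultdict

# Hypothetical rule standing in for LLM extraction:
# "<Subject> <verb> <object>" for a few known verbs.
RELATION_PATTERN = re.compile(r"^(\w+) (builds|uses|use|stores) (.+)$")


def extract(raw_text):
    """Extract: split raw input into sentence-sized chunks."""
    return [s.strip() for s in re.split(r"[.!?]", raw_text) if s.strip()]


def cognify(chunks):
    """Cognify: turn chunks into (subject, relation, object) triples."""
    triples = []
    for chunk in chunks:
        match = RELATION_PATTERN.match(chunk)
        if match:
            triples.append(match.groups())
    return triples


def load(triples, graph=None):
    """Load: add triples to an adjacency-list graph (subject -> edges)."""
    graph = graph if graph is not None else defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return graph


text = "Cognee builds knowledge graphs. Agents use memory. The weather is nice."
graph = load(cognify(extract(text)))
print(dict(graph))  # {'Cognee': [('builds', 'knowledge graphs')], 'Agents': [('use', 'memory')]}
```

The weather sentence fits no rule and is skipped, which illustrates why a rigid rule set is a poor substitute for model-driven extraction in the Cognify step.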

In this blog, we’ll explore what Cognee is and why it matters, its key features, how to get started, how self-hosted and hosted deployment compare and some real-world applications that showcase its power.

Why Cognee?

Most RAG systems work by fetching relevant chunks from a knowledge base when prompted. While effective for simple lookups, they often lack dynamic memory and contextual reasoning. Cognee replaces this static approach with a graph and vector-powered memory system that is:

- Scalable – handles millions of data points efficiently

- Modular – customizable pipelines for different use cases

- Precise – increases the accuracy of AI responses

- Cost-effective – reduces compute and developer effort

By combining knowledge graphs and vector embeddings, Cognee enables agents to “think” with memory instead of just retrieving snippets.
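Chunk-only retrieval returns just the passage most similar to the query. The toy sketch below, which is not Cognee's implementation, shows the hybrid idea: a vector-similarity step picks an entry node, then a graph step pulls in connected facts. Bag-of-words counts are an assumption standing in for real embeddings, and `NODES`, `EDGES` and `hybrid_search` are hypothetical names.

```python
import math
from collections import Counter

# Hypothetical toy data: node texts plus directed "related to" edges.
NODES = {
    "cognee": "cognee is an open source memory framework for ai agents",
    "ecl": "the ecl pipeline extracts cognifies and loads data",
    "graph": "a knowledge graph links entities with typed relations",
}
EDGES = {"cognee": ["ecl"], "ecl": ["graph"], "graph": []}


def embed(text):
    """Bag-of-words counts, standing in for a real embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hybrid_search(query, hops=1):
    """Vector step picks a seed node; graph step adds nodes up to `hops` edges away."""
    query_vec = embed(query)
    seed = max(NODES, key=lambda node: cosine(query_vec, embed(NODES[node])))
    visited, frontier = [seed], [seed]
    for _ in range(hops):
        next_frontier = []
        for node in frontier:
            for neighbor in EDGES[node]:
                if neighbor not in visited and neighbor not in next_frontier:
                    next_frontier.append(neighbor)
        visited.extend(next_frontier)
        frontier = next_frontier
    return [NODES[node] for node in visited]


print(hybrid_search("what is cognee"))
```

A pure vector lookup for "what is cognee" would stop at the first node; the graph hop also surfaces the related ECL fact, and raising `hops` widens the context further.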

Key Features

Whether you’re a researcher, developer, or enterprise team, Cognee offers a wide range of features:

- AI Memory Layer – store and interconnect documents, conversations, images and audio.

- Graph & Vector Hybrid – unify structured knowledge graphs with unstructured vector search.

- 30+ Data Source Integrations – ingest data seamlessly from different platforms.

- Pythonic Pipelines – simple, readable pipelines for data processing and search.

- Customizable Architecture – extend with your own tasks, APIs and workflows.

- Self-hosted & Hosted Options – choose between the self-managed open-source version and a managed hosted UI.

This makes it a drop-in replacement for RAG systems while providing long-term memory for agents.
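The “Pythonic Pipelines” and “Customizable Architecture” features rest on a familiar pattern: a pipeline is a chain of async tasks, each consuming the previous task's output. The sketch below illustrates that pattern in plain `asyncio`. `clean`, `deduplicate`, `tag` and `run_pipeline` are hypothetical names, and Cognee's own task and pipeline API may look different, so check the project documentation before extending it.

```python
import asyncio


# Hypothetical tasks: each takes the previous task's output and returns new data.
async def clean(items):
    """Trim whitespace and lowercase every item."""
    return [item.strip().lower() for item in items]


async def deduplicate(items):
    """Drop repeats while keeping first-seen order."""
    return list(dict.fromkeys(items))


async def tag(items):
    """Attach simple metadata (word count) to each item."""
    return [{"text": item, "words": len(item.split())} for item in items]


async def run_pipeline(data, tasks):
    """Run tasks in order, feeding each one's output into the next."""
    for task in tasks:
        data = await task(data)
    return data


result = asyncio.run(
    run_pipeline(["  Cognee ", "cognee", "AI memory"], [clean, deduplicate, tag])
)
print(result)  # [{'text': 'cognee', 'words': 1}, {'text': 'ai memory', 'words': 2}]
```

Because each task is just an async function, adding a custom step means writing one more function and placing it in the task list.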

Getting Started

Cognee supports Python 3.10 to 3.12 and can be installed with uv:

```bash
uv pip install cognee
```

It can also be installed with pip (`pip install cognee`) or Poetry (`poetry add cognee`).

Basic Example in Python

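```python
import asyncio

import cognee


async def main():
    # Assumes an LLM provider key is already configured,
    # e.g. via the LLM_API_KEY environment variable.

    # Add text to Cognee
    await cognee.add("Cognee turns documents into AI memory.")

    # Generate the knowledge graph
    await cognee.cognify()

    # Add memory algorithms to the graph
    await cognee.memify()

    # Query the knowledge graph
    results = await cognee.search("What does cognee do?")

    # Display the results
    for result in results:
        print(result)


if __name__ == '__main__':
    asyncio.run(main())
```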

Example output (the exact wording of search results may vary between runs and models):

```text
Cognee turns documents into AI memory.
```

CLI Usage

```bash
cognee-cli add "Cognee turns documents into AI memory."
cognee-cli cognify
cognee-cli search "What does cognee do?"
```

Or launch the UI with:

```bash
cognee-cli -ui
```

Deployment Options

Cognee offers two deployment options, depending on your needs:

- Self-hosted (Open Source)
  - Full control over data
  - Run locally or on your servers
  - Ideal for privacy-focused projects

- Hosted Platform (Cogwit)
  - Managed UI and enterprise-ready features
  - Automatic updates, analytics, and security
  - Easy setup with API key integration

This dual approach makes it suitable for both individual developers and large enterprises.

Real-World Applications

Cognee’s memory-first approach opens doors for a wide range of use cases:

- Conversational AI Agents – build chatbots that remember past interactions.

- Research Assistants – organize and query academic papers, datasets and notes.

- Enterprise Knowledge Bases – connect internal documents, manuals and logs.

- Personal Productivity Tools – manage emails, notes and tasks with AI memory.

- GraphRAG Systems – enhance reasoning by combining vector retrieval with graph reasoning.
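For the conversational use case, the core idea is that facts stated in earlier turns persist and shape later replies. The sketch below is a toy: `ConversationMemory`, `FACT_PATTERNS` and the regex-based fact extraction are hypothetical stand-ins for what a memory layer such as Cognee would do with an LLM and a knowledge graph.

```python
import re
from collections import defaultdict

# Hypothetical regex rules standing in for LLM-based fact extraction.
FACT_PATTERNS = {
    "name": re.compile(r"\bmy name is (\w+)", re.IGNORECASE),
    "interest": re.compile(r"\bi (?:like|love) (\w+)", re.IGNORECASE),
}


class ConversationMemory:
    """Stores facts per user so later turns can use earlier ones."""

    def __init__(self):
        self.facts = defaultdict(dict)  # user_id -> {fact_key: value}

    def remember(self, user_id, message):
        """Extract any known fact types from the message and store them."""
        for key, pattern in FACT_PATTERNS.items():
            match = pattern.search(message)
            if match:
                self.facts[user_id][key] = match.group(1)

    def reply(self, user_id, message):
        """Update memory, then answer using everything known about the user."""
        self.remember(user_id, message)
        known = self.facts[user_id]
        if "name" in known and "interest" in known:
            return f"Hi {known['name']}, want to talk more about {known['interest']}?"
        if "name" in known:
            return f"Hi {known['name']}!"
        return "Hello! Tell me about yourself."


memory = ConversationMemory()
print(memory.reply("u1", "My name is Ada"))  # Hi Ada!
print(memory.reply("u1", "I love Python"))   # Hi Ada, want to talk more about Python?
```

The second reply uses a fact from the first turn even though the second message never mentions the user's name, which is the behavior a stateless chunk lookup cannot provide on its own.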

Community and Contributions

Cognee is backed by a growing open-source community; at the time of writing, the project had 7.5k+ GitHub stars, 670+ forks and 70+ contributors.

Conclusion

Cognee is redefining how AI agents handle memory, reasoning and context. By replacing traditional RAG systems with a graph-vector hybrid memory layer, it allows developers to build smarter, more reliable and cost-efficient AI systems.

Whether you’re experimenting locally or deploying enterprise-grade solutions, it offers the flexibility, scalability and intelligence needed to power the next generation of AI agents.


