What is a Decision Tree in Machine Learning?

A Decision Tree in Machine Learning is a popular predictive model that makes decisions by asking a series of yes-or-no questions. It works like a flowchart, where each internal node represents a decision based on a specific feature, each branch corresponds to an outcome of the decision, and each leaf node represents a final prediction or classification.

This tree-like structure allows the model to break down complex problems into simpler, more manageable parts. By evaluating conditions and rules derived from input data, decision trees guide the machine step by step toward an outcome, whether it’s classifying a customer, predicting sales, or diagnosing medical conditions. Their intuitive design makes them easy to understand, interpret, and visualize, which is why they’re widely used across industries like finance, healthcare, and marketing.

Decision trees are commonly used for both classification (e.g., spam or not spam) and regression (e.g., predicting a house price).

How Does a Decision Tree Work?

A Decision Tree works by splitting data into smaller and more specific subsets based on input features, creating a path of decisions that leads to a final output. At each step of the tree, known as a node, the algorithm asks a question about a particular feature of the data. Based on the answer (typically a binary yes/no), the data is split and passed down the appropriate branch. This process continues recursively until the model reaches a leaf node, which contains the final prediction: either a class label (in classification) or a numerical value (in regression).

For example:

- Is age > 30?

  - Yes → Is income > 50k? → Predict “Yes”

  - No → Predict “No”

Splitting continues until a stopping condition is met, such as a maximum tree depth or a minimum number of samples in a node.
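As a rough illustration of how a prediction walks from the root to a leaf, the age/income example can be written as a small nested dictionary. This is only a sketch: the `TREE` layout and the `predict` helper are hypothetical names, and the branch for incomes of 50k or less is an assumption, since the example above does not specify it.

```python
# Hypothetical encoding of the age/income example as nested dictionaries.
# The original example does not say what happens when income is 50k or less,
# so predicting "No" on that branch is an assumption made for illustration.
TREE = {
    "feature": "age",
    "threshold": 30,
    "yes": {
        "feature": "income",
        "threshold": 50_000,
        "yes": {"prediction": "Yes"},
        "no": {"prediction": "No"},  # assumed branch
    },
    "no": {"prediction": "No"},
}


def predict(node, sample):
    """Walk from the root to a leaf, answering one yes/no question per node."""
    while "prediction" not in node:
        answer = sample[node["feature"]] > node["threshold"]
        node = node["yes"] if answer else node["no"]
    return node["prediction"]


print(predict(TREE, {"age": 42, "income": 65_000}))  # Yes
print(predict(TREE, {"age": 25, "income": 90_000}))  # No
```

Each loop iteration corresponds to one internal node, so the number of questions asked for a sample equals the depth of the leaf it reaches.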

Types of Decision Trees

1. Classification Trees

Classification Trees are used when the output variable is categorical, meaning the goal is to assign the input data to a specific category or class. These trees help answer questions like “Is this email spam?”, “Will the customer churn?”, or “Is the transaction fraudulent?”

At each decision node, the tree splits the data on the feature (and threshold) that gives the highest information gain or the greatest reduction in Gini impurity. The final prediction, found at the leaf node, is the most common class label among the training examples that reach that leaf.
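To make the two split criteria concrete, here is a minimal sketch that computes Gini impurity, entropy, and the impurity reduction of one candidate split. The labels are made up for illustration, and the function names are hypothetical rather than taken from any library.

```python
from collections import Counter
from math import log2


def gini(labels):
    """Gini impurity: 1 - sum(p_k^2) over the class proportions p_k."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def entropy(labels):
    """Shannon entropy in bits: -sum(p_k * log2(p_k))."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def impurity_reduction(parent, left, right, measure):
    """Parent impurity minus the size-weighted impurity of the two children."""
    n = len(parent)
    weighted = (len(left) / n) * measure(left) + (len(right) / n) * measure(right)
    return measure(parent) - weighted


# Made-up labels for illustration: 4 spam and 4 ham emails.
parent = ["spam"] * 4 + ["ham"] * 4
left = ["spam", "spam", "spam", "ham"]   # e.g. emails containing some keyword
right = ["spam", "ham", "ham", "ham"]    # emails without it

print(gini(parent))                                    # 0.5
print(entropy(parent))                                 # 1.0
print(impurity_reduction(parent, left, right, gini))   # 0.125
# With entropy as the measure, the reduction is the information gain (about 0.19 here).
print(impurity_reduction(parent, left, right, entropy))
```

A tree-building algorithm would evaluate this reduction for every candidate split and keep the one with the largest value.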

Examples of classification problems:

- Predicting if a loan applicant is approved or denied

- Classifying emails as spam or not spam

- Diagnosing whether a patient is healthy or at risk

Classification Trees are especially useful in binary classification, but they can also handle multi-class classification tasks efficiently.

2. Regression Trees

Regression Trees are used when the output variable is continuous, meaning you’re trying to predict a numeric value instead of a category. These trees choose splits that minimize an error measure such as the Mean Squared Error (MSE) or Mean Absolute Error (MAE) on the training data.

Instead of assigning class labels at the leaf nodes, regression trees assign a real-number value, usually the average target value of the training samples in that leaf. As a result, the tree’s predictions are piecewise constant: every input that lands in the same leaf receives the same value.
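The sketch below shows one way a regression tree might pick a single split: it tries a threshold between each pair of adjacent feature values and keeps the one with the lowest total squared error, then uses the mean of each side as the leaf value. The data and the helper names (`sse`, `best_split`) are assumptions made for illustration.

```python
def mean(values):
    return sum(values) / len(values)


def sse(values):
    """Sum of squared errors around the mean (the MSE times the sample count)."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def best_split(xs, ys):
    """Try a threshold between each pair of adjacent x values and keep the
    one with the lowest total squared error across the two leaves."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold fits between equal x values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        error = sse(left) + sse(right)
        if best is None or error < best[0]:
            best = (error, threshold, mean(left), mean(right))
    return best


# Made-up data: house size in square metres and price in thousands.
sizes = [50, 60, 70, 120, 130, 140]
prices = [100, 110, 120, 300, 310, 320]

error, threshold, left_value, right_value = best_split(sizes, prices)
print(threshold, left_value, right_value)  # 95.0 110.0 310.0
```

Here any house smaller than 95 square metres would be predicted at 110 and any larger house at 310, which is the leaf-average behaviour described above.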

Examples of regression problems:

- Predicting the price of a house based on location, size, and amenities

- Forecasting future sales of a product

- Estimating temperature or air pollution levels over time

Regression Trees capture non-linear relationships between features and output, and are often used as a base model in advanced ensemble techniques like Gradient Boosting Regressors.

Real-Life Example

Let’s say you want to predict if a fruit is an apple or an orange:

- Is it orange in color?

  - Yes → Orange

  - No → Apple

This simple logic is how a Decision Tree in Machine Learning operates.

Advantages of Decision Trees

- Easy to understand and explain.

- Handles both numerical and categorical data.

- No need for scaling or normalization.

- Fast to train compared with many other algorithms.

Limitations and How to Overcome Them

- Overfitting: Trees can become too complex. Use pruning or max depth to control this.

- Instability: Small data changes can affect the tree. Use ensemble models like Random Forest or Gradient Boosting for better stability.
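To see how a depth limit curbs overfitting, the following sketch builds a tiny classification tree on a single numeric feature using Gini impurity. The `build`, `depth_of`, and `predict` helpers and the toy data are hypothetical; the point at `x = 3` is deliberately labelled "B" to act as noise among the "A" samples.

```python
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def build(rows, depth=0, max_depth=None):
    """rows is a list of (x, label) pairs with a single numeric feature x."""
    labels = [label for _, label in rows]
    majority = Counter(labels).most_common(1)[0][0]
    # Stop when the node is pure or the depth limit is reached.
    if len(set(labels)) == 1 or (max_depth is not None and depth >= max_depth):
        return {"prediction": majority}

    rows = sorted(rows)
    best = None
    for i in range(1, len(rows)):
        if rows[i - 1][0] == rows[i][0]:
            continue  # no threshold fits between equal x values
        left, right = rows[:i], rows[i:]
        # Size-weighted Gini impurity of the two children (lower is better).
        score = (len(left) * gini([lab for _, lab in left])
                 + len(right) * gini([lab for _, lab in right]))
        if best is None or score < best[0]:
            best = (score, (rows[i - 1][0] + rows[i][0]) / 2, left, right)
    if best is None:  # identical x values with mixed labels: cannot split
        return {"prediction": majority}

    _, threshold, left, right = best
    return {
        "threshold": threshold,
        "left": build(left, depth + 1, max_depth),
        "right": build(right, depth + 1, max_depth),
    }


def depth_of(node):
    if "prediction" in node:
        return 0
    return 1 + max(depth_of(node["left"]), depth_of(node["right"]))


def predict(node, x):
    while "prediction" not in node:
        node = node["left"] if x <= node["threshold"] else node["right"]
    return node["prediction"]


# Toy data: mostly "A" for small x and "B" for large x, with one noisy point at x = 3.
rows = [(1, "A"), (2, "A"), (3, "B"), (4, "A"),
        (5, "B"), (6, "B"), (7, "B"), (8, "B")]

full = build(rows)                  # grows until every leaf is pure
shallow = build(rows, max_depth=1)  # a single split

print(depth_of(full), predict(full, 3))        # 3 B
print(depth_of(shallow), predict(shallow, 3))  # 1 A
```

The unrestricted tree spends two extra levels memorizing the noisy point, while the depth-limited tree ignores it and keeps the simpler rule. Ensembles such as Random Forest go further by averaging many such trees trained on different samples of the data.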

Common Use Cases of Decision Trees

- Loan approval prediction

- Diagnosing diseases

- Customer churn analysis

- Credit scoring

- Spam email detection

- Recommender systems

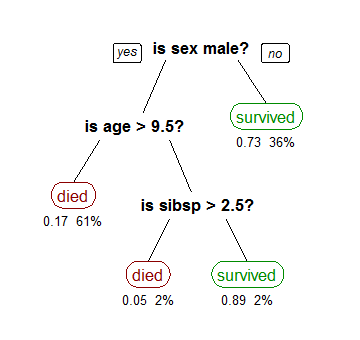

Visual Example

Decision Tree in Machine Learning example for Titanic dataset.

Conclusion

A Decision Tree in Machine Learning is an intuitive and powerful algorithm for decision-making tasks. Whether you’re working on customer behavior, credit risk, or product recommendations, decision trees provide a solid foundation for building intelligent systems.
