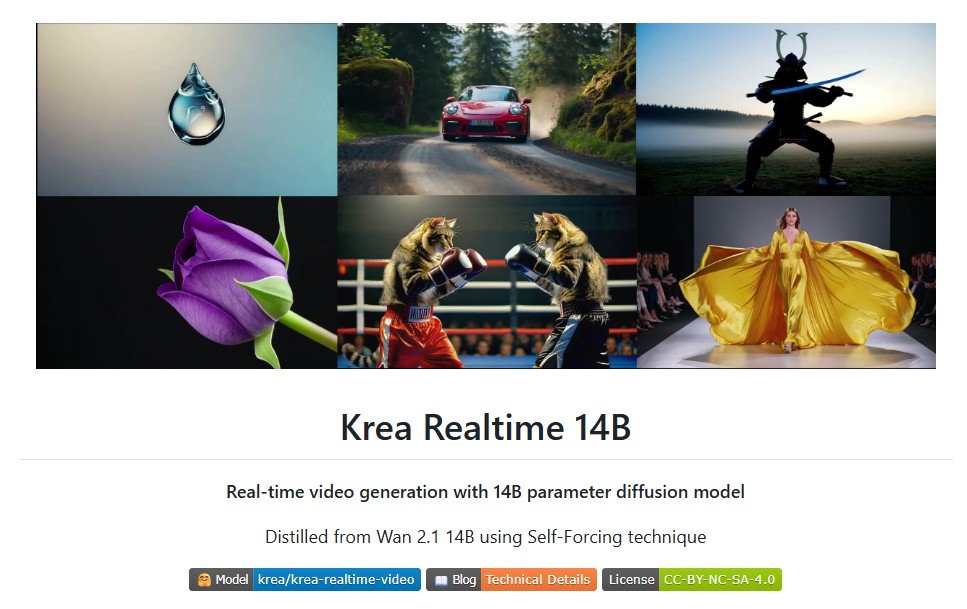

The field of artificial intelligence is undergoing a remarkable transformation, and one of the most exciting developments is the rise of real-time video generation. From cinematic visual effects to immersive virtual environments, AI is rapidly blurring the boundaries between imagination and reality. At the forefront of this innovation stands Krea Realtime 14B, an advanced open-source video diffusion model capable of generating videos in real time.

Developed by Krea-AI, Krea Realtime 14B represents a significant breakthrough in both performance and accessibility. The model is distilled from Wan 2.1 14B using a method known as Self-Forcing distillation, which converts traditional diffusion-based video models into autoregressive systems optimized for speed. This allows the model to generate realistic video sequences at frame rates previously out of reach for models of this size.

The Evolution of Video Generation

AI video generation has historically faced one major bottleneck: latency. Conventional diffusion models require multiple iterative steps to produce high-quality frames, making them computationally expensive and too slow for real-time applications.

Krea Realtime 14B breaks this barrier by leveraging Self-Forcing, a technique that enables the model to predict frames sequentially and continuously, mimicking how autoregressive text models generate language. This shift from static diffusion to dynamic autoregression allows Krea’s system to perform real-time video rendering, transforming the user experience from passive waiting to active interaction.
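
To make the idea of sequential frame prediction concrete, here is a minimal toy sketch in Python. It is not Krea's implementation: each "frame" is a single float, and `denoise_step` and `generate_autoregressive` are hypothetical names standing in for a real video model. It only illustrates the control flow described above, where every frame takes a small number of denoising steps and is conditioned on the frames the model has already produced.

```python
import random


def denoise_step(sample, context, step, num_steps):
    """Toy stand-in for one denoising pass of a video model (hypothetical).

    Pulls the noisy sample toward a target derived from earlier frames.
    """
    target = context[-1] + 0.1 if context else 0.0
    weight = (step + 1) / num_steps  # later steps trust the target more
    return sample + weight * (target - sample)


def generate_autoregressive(num_frames, num_steps=4, seed=0):
    """Yield frames one at a time, each conditioned on the frames before it."""
    rng = random.Random(seed)
    frames = []
    for _ in range(num_frames):
        sample = rng.gauss(0.0, 1.0)  # every frame starts from fresh noise
        for step in range(num_steps):  # a few steps instead of dozens
            sample = denoise_step(sample, frames, step, num_steps)
        frames.append(sample)  # later frames see the model's own output
        yield sample  # available to the caller before the next frame starts


for index, frame in enumerate(generate_autoregressive(3)):
    print(index, round(frame, 3))
```

Because the function is a generator, a caller can display each frame as soon as it is yielded, which is the property that makes interactive use possible.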

The scalability of this approach is what makes it groundbreaking. While previous implementations of Self-Forcing were confined to smaller networks, Krea successfully scaled the method to a 14-billion parameter model, achieving performance that balances precision, quality and real-time responsiveness.

Core Features of Krea Realtime 14B

1. Real-Time Video Diffusion at Scale

Krea Realtime 14B is capable of generating videos at 11 frames per second (fps) on an NVIDIA B200 GPU, with only four inference steps. This makes it one of the first diffusion models to reach truly interactive speeds while maintaining cinematic visual fidelity.
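
As a rough back-of-the-envelope check, the figures above imply a time budget per frame and per inference step. The sketch below assumes the four steps run one after another for each frame and ignores decoding and any other overhead, so it is an upper bound on what each step can take rather than a measured number; `latency_budget_ms` is an illustrative helper, not part of the Krea codebase.

```python
def latency_budget_ms(fps, steps):
    """Return (milliseconds per frame, milliseconds per inference step)."""
    per_frame = 1000.0 / fps
    return per_frame, per_frame / steps


per_frame, per_step = latency_budget_ms(fps=11, steps=4)
print(f"{per_frame:.1f} ms per frame, {per_step:.1f} ms per step")
# prints "90.9 ms per frame, 22.7 ms per step"
```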

2. Advanced Memory and Cache Management

Real-time inference at this scale demands efficient memory utilization. The Krea model employs optimized KV cache management, consuming around 25 GB of VRAM per GPU. This keeps high-resolution video generation within hardware limits.
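
The article does not describe how the cache is managed, so the following is only one common pattern, shown as an assumption: a fixed-size sliding window that keeps the keys and values for the most recent frames and drops the oldest. `RollingKVCache` is a hypothetical class, and strings stand in for the tensors a real model would store.

```python
from collections import deque


class RollingKVCache:
    """Keep keys/values for at most `max_frames` recent frames (toy sketch)."""

    def __init__(self, max_frames):
        # deque(maxlen=...) discards the oldest entry automatically when full
        self.keys = deque(maxlen=max_frames)
        self.values = deque(maxlen=max_frames)

    def append(self, key, value):
        self.keys.append(key)
        self.values.append(value)

    def __len__(self):
        return len(self.keys)

    def context(self):
        """Return the cached keys and values, oldest first."""
        return list(self.keys), list(self.values)


cache = RollingKVCache(max_frames=3)
for i in range(5):
    cache.append(f"k{i}", f"v{i}")
print(cache.context())
# prints (['k2', 'k3', 'k4'], ['v2', 'v3', 'v4'])
```

Bounding the window keeps memory use constant no matter how long the video runs, at the cost of forgetting older frames.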

3. Multiple Attention Backends

Krea supports several attention mechanisms, including Flash Attention 4 and SageAttention, offering users flexibility to optimize the model based on their hardware setup. For developers working with NVIDIA B200 GPUs, Flash Attention 4 delivers the best performance, while SageAttention ensures compatibility with a wider range of systems, including the H100 and RTX 5xxx series.
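
The hardware guidance above can be written down as a small selection helper. This is a sketch under stated assumptions: `pick_attention_backend` and the returned labels are illustrative and are not configuration values taken from the Krea repository.

```python
def pick_attention_backend(gpu_name: str) -> str:
    """Map a GPU name to the backend suggested in the text (labels are made up)."""
    name = gpu_name.upper()
    if "B200" in name:
        return "flash_attention_4"  # reported as fastest on B200
    return "sage_attention"  # broader compatibility, e.g. H100 and RTX 5xxx


print(pick_attention_backend("NVIDIA B200"))
print(pick_attention_backend("NVIDIA H100 80GB HBM3"))
```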

4. Dual Operation Modes

Krea Realtime 14B operates in two primary modes — real-time streaming and offline batch generation. The WebSocket-based streaming server allows users to interact with the model live, sending prompts and watching videos materialize frame by frame. For offline users, the batch sampling mode offers high-quality video generation without the need for a continuous server connection.

5. Video-to-Video Transformation

Beyond text-to-video generation, Krea also supports video-to-video transformation, where existing footage can be enhanced, restyled or reinterpreted using AI. This feature is particularly useful for creative professionals who wish to integrate generative AI into post-production workflows.

Technical Architecture

The Krea Realtime 14B model builds upon the Wan 2.1 text-to-video framework, incorporating techniques from LightX2V, a timestep-distilled checkpoint designed for efficient inference. Its Self-Forcing architecture converts diffusion into a predictive loop, reducing redundant computations and enabling consistent frame-to-frame coherence.

This architectural design ensures stability in long-form video generation, allowing sequences to maintain temporal continuity — a long-standing challenge in video synthesis. Krea’s engineering team implemented numerous optimizations to make this possible, including advanced model parallelism, GPU-specific compilation with torch.compile and efficient handling of attention maps.

The model's parameters are set through YAML-based configuration files. Developers can adjust settings like frame dimensions, seed values and the number of attention blocks to balance quality and speed according to project needs.
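
As an illustration of what such settings might look like once loaded, the sketch below validates a dictionary of overrides of the kind a YAML parser such as PyYAML's `yaml.safe_load` would return. The field names (`width`, `height`, `seed`, `num_blocks`) and the default values are placeholders chosen for this example, not the keys or defaults used by Krea's actual configuration files.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class SamplingConfig:
    # Placeholder defaults for illustration only
    width: int = 832
    height: int = 480
    seed: int = 0
    num_blocks: int = 8

    def __post_init__(self):
        for f in fields(self):
            value = getattr(self, f.name)
            if not isinstance(value, int):
                raise ValueError(f"{f.name} must be an integer")
            if f.name != "seed" and value <= 0:
                raise ValueError(f"{f.name} must be positive")


def load_config(overrides: dict) -> SamplingConfig:
    """Build a config from parsed settings, rejecting unknown keys."""
    known = {f.name for f in fields(SamplingConfig)}
    unknown = set(overrides) - known
    if unknown:
        raise KeyError(f"unknown config keys: {sorted(unknown)}")
    return SamplingConfig(**overrides)


config = load_config({"seed": 42, "num_blocks": 4})
print(config)
```

Rejecting unknown keys catches typos in a config file early, before any GPU time is spent.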

Setup and Accessibility

Krea-AI has made deploying Krea Realtime 14B remarkably straightforward for developers. The system requires:

- GPU: Minimum 40GB VRAM (B200, H100 or RTX 5xxx series recommended)

- OS: Linux (Ubuntu preferred)

- Python: Version 3.11 or higher

- Storage: Approximately 30GB for model checkpoints
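
The Python and storage requirements above can be checked before installing anything. The following preflight sketch uses only the standard library; the function names are illustrative, 1 GB is treated as 2^30 bytes, and the GPU check is left out because it would need a GPU library such as PyTorch.

```python
import shutil
import sys

REQUIRED_PYTHON = (3, 11)
REQUIRED_DISK_GB = 30  # approximate checkpoint size from the list above


def check_python(version=None):
    """True if the given (major, minor) version, or the running one, is new enough."""
    version = sys.version_info[:2] if version is None else version
    return tuple(version) >= REQUIRED_PYTHON


def check_disk(path=".", free_bytes=None):
    """True if the filesystem holding `path` has room for the checkpoints."""
    if free_bytes is None:
        free_bytes = shutil.disk_usage(path).free
    return free_bytes >= REQUIRED_DISK_GB * 1024**3


print("Python OK:", check_python())
print("Disk OK:", check_disk())
```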

The installation involves setting up a Python environment, installing the appropriate attention backend and downloading model weights via the Hugging Face CLI. From there, users can either launch a WebSocket server using release_server.py for interactive generation or run sample.py for offline sampling.

The WebSocket server also includes a web-based interface (release_demo.html), allowing users to enter prompts, tweak parameters, and visualize video output in real time – all from a simple browser window.

Impact on the AI Video Landscape

Krea Realtime 14B is more than a technical milestone; it’s a paradigm shift. By delivering high-quality real-time video generation in an open-source format, Krea is lowering the barrier to entry for AI-driven creativity. Independent developers, researchers, and artists can now experiment with a model that rivals proprietary systems but remains transparent and modifiable.

This democratization of real-time video AI opens the door to new possibilities from interactive storytelling and virtual filmmaking to live visual art and gaming environments. It also sets a foundation for future research into autonomous video agents, capable of understanding and generating visual scenes dynamically in response to user interaction or environmental cues.

Conclusion

Krea Realtime 14B marks a pivotal moment in the evolution of generative AI. It successfully merges scale, speed, and sophistication, offering an open-source pathway to real-time video creation. By distilling Wan 2.1 through Self-Forcing, Krea-AI has achieved something extraordinary – a 14-billion parameter model that runs efficiently enough for interactive use while preserving the visual richness of diffusion models.

As AI continues to shape creative industries, Krea Realtime 14B stands out as a model that not only showcases technical excellence but also redefines accessibility. It is a powerful reminder that the future of AI innovation lies not just in larger models, but in smarter, more efficient systems that empower creators everywhere.
