In the rapidly expanding world of artificial intelligence, data storage and retrieval efficiency have become major bottlenecks for scalable AI systems. The growth of Retrieval-Augmented Generation (RAG) and Large Language Models (LLMs) has further intensified the demand for fast, private and space-efficient vector databases. Traditional systems like FAISS or Milvus, while powerful, are resource-heavy: they store an embedding for every indexed item, and large deployments often run on cloud infrastructure, leading to rising costs and privacy concerns.

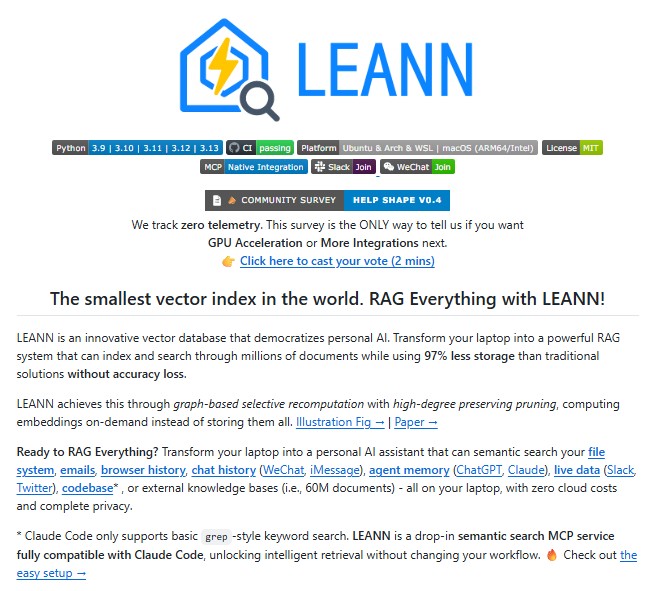

Enter LEANN, an open-source project that rethinks how vector databases operate. Developed by researchers at UC Berkeley’s Sky Computing Lab, LEANN positions itself as “the smallest vector index in the world.” It reports storage savings of up to 97% compared to conventional vector databases, without sacrificing search accuracy.

This blog explores how LEANN turns your laptop into a personal AI powerhouse capable of fast, offline semantic search while keeping your data private.

What Is LEANN?

LEANN stands for Low-Storage Efficient Approximate Nearest Neighbor index. It is a vector database and RAG engine designed for personal AI systems. What makes it special is its ability to index and search millions of documents, emails or messages using only a fraction of the storage that traditional systems require.

In simple terms, LEANN acts as a memory engine for AI applications, allowing your models, assistants and chatbots to retrieve relevant information from local or live sources. When paired with local embedding and language models, it runs entirely offline, so your data never leaves your laptop: no cloud services, no external APIs and no privacy compromises.

Why LEANN Is a Game-Changer?

1. Unmatched Storage Efficiency

Traditional vector databases store embeddings for every data chunk, consuming massive amounts of space. For instance, indexing 60 million text chunks in FAISS can occupy over 200 GB of disk space. LEANN does the same using just 6 GB, thanks to its graph-based selective recomputation method.

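Instead of storing all embeddings, it recomputes only the ones a given search actually needs, saving up to 97% of storage without a reported loss in accuracy. The trade-off is extra computation at query time, since the embedding model must run on the data points the search visits.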
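A quick back-of-envelope calculation shows why storing every embedding gets expensive. The sketch below assumes 768-dimensional float32 embeddings (our assumption; the post does not name the embedding model) and counts only the raw vectors, ignoring graph links and metadata.

```python
def embedding_storage_bytes(num_chunks: int, dim: int = 768, bytes_per_value: int = 4) -> int:
    """Bytes needed to store one dense vector of `dim` values per chunk."""
    return num_chunks * dim * bytes_per_value


# Assumption: 768-dimensional float32 vectors (4 bytes per value).
# Graph links, IDs and metadata are ignored, so a real index is larger.
raw_bytes = embedding_storage_bytes(60_000_000)
print(f"Raw embeddings for 60M chunks: {raw_bytes / 1e9:.1f} GB")
```

Under this assumption the vectors alone would take about 184 GB, which suggests that the embeddings, rather than the graph, dominate a traditional index of this size.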

2. Complete Data Privacy

In an era where cloud-based AI systems raise concerns about data ownership and security, LEANN takes an offline-first approach. Your emails, chat histories, browser data, or codebases remain fully private, stored and processed locally. There’s no cloud dependency, meaning your personal or organizational data stays secure and compliant.

3. Scalability and Portability

LEANN’s lightweight architecture makes it ideal for large-scale indexing across diverse datasets. Whether you’re working with millions of emails, years of browser history, or thousands of PDF files, LEANN handles it seamlessly. Moreover, since indexes are portable, you can transfer your entire AI memory between devices or even share it with collaborators easily.

4. Accuracy Without Compromise

Despite its aggressive storage optimization, LEANN maintains the same search quality as traditional systems. The project’s benchmarks report that it matches the accuracy of FAISS and DiskANN while using only a fraction of the storage.

Reported storage footprints on several datasets:

| Dataset | Traditional vector DB | LEANN | Storage Saved |
|---|---|---|---|
| Wikipedia (60M chunks) | 201 GB | 6 GB | 97% |
| Chat history (400K) | 1.8 GB | 64 MB | 97% |
| Email data (780K) | 2.4 GB | 79 MB | 97% |
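The percentages can be rechecked from the sizes in the table. This sketch assumes decimal units (1 GB = 1000 MB), since the table does not say which convention it uses.

```python
# Sizes copied from the storage table, converted to MB.
# Assumption: decimal units (1 GB = 1000 MB).
TABLE = {
    "Wikipedia (60M chunks)": (201_000, 6_000),
    "Chat history (400K)": (1_800, 64),
    "Email data (780K)": (2_400, 79),
}


def storage_saved(traditional_mb: float, leann_mb: float) -> float:
    """Fraction of storage saved by LEANN relative to the traditional index."""
    return 1 - leann_mb / traditional_mb


for name, (traditional_mb, leann_mb) in TABLE.items():
    print(f"{name}: {storage_saved(traditional_mb, leann_mb):.1%} saved")
```

With these units the Wikipedia row comes out at 97.0%, while the chat and email rows land near 96.4% and 96.7%, so the 97% figures in those rows are rounded.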

The Core Technology Behind LEANN

LEANN’s innovation lies in how it organizes and retrieves embeddings. The system introduces several advanced techniques:

1. Graph-Based Selective Recomputation

Instead of storing every embedding, LEANN keeps a proximity graph that links related data points, along with the raw data itself. During a search it walks this graph and computes embeddings only for the nodes it visits, which keeps storage small and limits the extra computation to the part of the graph relevant to the current query.
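The idea can be illustrated on a toy scale with a best-first search over a single-layer proximity graph that stores only raw text and calls the encoder for a node the first time the search reaches it. This is a simplified sketch, not LEANN’s implementation; `toy_embed` is a hypothetical stand-in for a real embedding model, and `ef` plays the role of the search-list size in HNSW-style search.

```python
import heapq
import math
from typing import Callable, Dict, List, Tuple

Vector = List[float]


def toy_embed(text: str, dim: int = 16) -> Vector:
    """Hypothetical encoder stand-in: L2-normalized bag of characters in `dim` buckets."""
    vec = [0.0] * dim
    for ch in text.lower():
        vec[ord(ch) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def search_with_recompute(
    query: str,
    texts: Dict[int, str],        # raw text per node; no embeddings are stored
    graph: Dict[int, List[int]],  # adjacency lists of the proximity graph
    entry: int,
    embed: Callable[[str], Vector] = toy_embed,
    ef: int = 3,                  # how many best candidates the search keeps
    top_k: int = 2,
) -> Tuple[List[int], int]:
    """Best-first graph search that embeds a node only when the search reaches it."""
    q = embed(query)
    cache: Dict[int, Vector] = {}  # embeddings recomputed for this query only

    def dist(node: int) -> float:
        if node not in cache:
            cache[node] = embed(texts[node])  # on-demand recomputation
        return 1.0 - sum(a * b for a, b in zip(q, cache[node]))  # cosine distance

    d0 = dist(entry)
    candidates = [(d0, entry)]  # min-heap: closest unexpanded node first
    best = [(-d0, entry)]       # max-heap via negation: current ef best nodes
    visited = {entry}
    while candidates:
        d, node = heapq.heappop(candidates)
        if len(best) >= ef and d > -best[0][0]:
            break  # the closest candidate is worse than everything kept
        for nb in graph[node]:
            if nb in visited:
                continue
            visited.add(nb)
            dn = dist(nb)
            if len(best) < ef or dn < -best[0][0]:
                heapq.heappush(candidates, (dn, nb))
                heapq.heappush(best, (-dn, nb))
                if len(best) > ef:
                    heapq.heappop(best)  # drop the farthest kept node
    ranked = sorted((-neg_d, n) for neg_d, n in best)
    return [n for _, n in ranked[:top_k]], len(cache)


texts = {
    0: "vector database storage",
    1: "graph index pruning",
    2: "email inbox search",
    3: "embedding recomputation saves storage",
    4: "chat history archive",
}
graph = {0: [1, 3], 1: [0, 2], 2: [1, 4], 3: [0, 4], 4: [2, 3]}
ids, num_embedded = search_with_recompute("storage savings", texts, graph, entry=0)
print(ids, f"({num_embedded} of {len(texts)} nodes embedded)")
```

On a five-node toy graph the search may end up embedding most nodes; the storage-versus-compute trade-off pays off on large graphs, where a query touches only a small fraction of the data.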

2. High-Degree Preserving Pruning

This technique prunes redundant edges from the graph while preserving the connections of key “hub” nodes that hold the graph together. Because searches can still route through these well-connected hubs, LEANN can search massive datasets quickly with a much smaller graph while maintaining accuracy.
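A simplified sketch of the pruning idea: every node’s out-edges are capped, but the nodes with the highest in-degree (the hubs) keep a larger budget, and ordinary nodes keep their edges to hubs first. The degree caps, the hub fraction and the hub-first ordering are our own assumptions for illustration, not LEANN’s exact rule.

```python
from collections import Counter
from typing import Dict, List


def prune_preserving_hubs(
    graph: Dict[int, List[int]],  # neighbors sorted from closest to farthest
    base_degree: int = 2,         # hypothetical out-degree cap for ordinary nodes
    hub_degree: int = 4,          # hypothetical, larger cap for hub nodes
    hub_fraction: float = 0.2,    # hypothetical share of nodes treated as hubs
) -> Dict[int, List[int]]:
    """Cap each node's out-degree, letting the highest in-degree nodes keep more edges."""
    in_degree = Counter(nb for nbs in graph.values() for nb in nbs)
    num_hubs = max(1, int(len(graph) * hub_fraction))
    hubs = set(sorted(graph, key=lambda n: in_degree[n], reverse=True)[:num_hubs])
    pruned = {}
    for node, nbs in graph.items():
        cap = hub_degree if node in hubs else base_degree
        # Keep edges into hubs first so searches can still route through them
        ordered = [nb for nb in nbs if nb in hubs] + [nb for nb in nbs if nb not in hubs]
        pruned[node] = ordered[:cap]
    return pruned


graph = {
    0: [1, 2, 3, 4],
    1: [0, 2, 3, 4],
    2: [0, 1, 3, 4],
    3: [0, 1, 2, 4],
    4: [0, 1, 2, 3],
    5: [0, 4, 1, 2],
}
pruned = prune_preserving_hubs(graph)
before = sum(len(v) for v in graph.values())
after = sum(len(v) for v in pruned.values())
print(f"edges: {before} -> {after}", pruned)
```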

3. Dynamic Batching and Two-Level Search

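LEANN improves GPU utilization by batching embedding computations dynamically, grouping the nodes that need recomputation so the encoder processes them together rather than one at a time. Its two-level search first ranks candidates with cheap approximate distances and then recomputes exact embeddings only for the most promising ones, aiming for fast and precise retrieval even on low-resource systems.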
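The shape of one two-level step can be sketched under simple assumptions: each node keeps a small stored approximation of its vector (here a rounded copy, standing in for a compressed code such as product quantization), candidates are ranked on those approximations first, and only the top share is re-embedded exactly, in fixed-size batches so the encoder runs once per batch. `keep_fraction`, `batch_size` and `fake_embed_batch` are hypothetical, and LEANN’s dynamic batching accumulates work across search steps, which this simplification does not model.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def two_level_rerank(
    query: Vector,
    candidates: List[int],
    approx: Dict[int, Vector],   # small stored approximations used for level 1
    texts: Dict[int, str],       # raw data used for exact recomputation in level 2
    embed_batch: Callable[[List[str]], List[Vector]],
    keep_fraction: float = 0.5,  # hypothetical share of candidates re-embedded
    batch_size: int = 2,         # hypothetical encoder batch size
) -> Tuple[List[Tuple[float, int]], int]:
    """Rank by approximate scores, then re-embed the best few in batches and rank exactly."""
    # Level 1: cheap ranking with the stored approximations (higher dot = closer)
    ranked = sorted(candidates, key=lambda n: dot(query, approx[n]), reverse=True)
    keep = ranked[: max(1, math.ceil(len(ranked) * keep_fraction))]

    # Level 2: exact embeddings for the survivors, one encoder call per batch
    exact: List[Tuple[float, int]] = []
    calls = 0
    for start in range(0, len(keep), batch_size):
        batch = keep[start : start + batch_size]
        vectors = embed_batch([texts[n] for n in batch])
        calls += 1
        exact.extend((dot(query, v), n) for v, n in zip(vectors, batch))
    exact.sort(reverse=True)  # highest exact score first
    return exact, calls


def fake_embed_batch(batch: List[str]) -> List[Vector]:
    """Hypothetical encoder: parses "x,y" strings into 2-D vectors."""
    return [[float(p) for p in text.split(",")] for text in batch]


texts = {0: "1,0", 1: "0.9,0.1", 2: "0,1", 3: "0.7,0.7"}
approx = {n: [float(round(x)) for x in fake_embed_batch([t])[0]] for n, t in texts.items()}
scores, calls = two_level_rerank([1.0, 0.0], list(texts), approx, texts, fake_embed_batch)
print(scores, "encoder calls:", calls)
```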

4. Multiple Backend Support

LEANN supports HNSW (Hierarchical Navigable Small World) and DiskANN backends, letting users trade off speed, memory use and accuracy. HNSW is well suited to smaller setups, while DiskANN offers better search performance for large-scale use.
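Choosing between the two backends is a configuration decision. A hypothetical rule of thumb might look like this; the 10-million-chunk threshold is our own placeholder rather than a LEANN recommendation, and we assume the backend names are the strings `"hnsw"` and `"diskann"` (the former appears in the setup example in this post).

```python
def pick_backend(num_chunks: int, large_corpus_threshold: int = 10_000_000) -> str:
    """Hypothetical rule of thumb: HNSW for small and medium corpora, DiskANN for very large ones."""
    if num_chunks < 0:
        raise ValueError("num_chunks must be non-negative")
    # Assumption: backend names are "hnsw" and "diskann"; the threshold is a placeholder.
    return "diskann" if num_chunks >= large_corpus_threshold else "hnsw"


for n in (400_000, 780_000, 60_000_000):
    print(f"{n:>12,} chunks -> {pick_backend(n)}")
```

The returned string would then be passed as `backend_name` when constructing `LeannBuilder`.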

RAG on Everything: Beyond Document Search

LEANN’s capabilities go far beyond simple document retrieval. It enables Retrieval-Augmented Generation (RAG) on virtually any data source, whether static or live. Here are some standout use cases:

- Personal Data Management: Search across PDFs, text files, markdown notes and technical papers directly from your device.
- Email RAG: Index and analyze your entire Apple Mail inbox locally, turning emails into a searchable knowledge base.
- Browser History Analysis: Search your entire Chrome browsing history to rediscover articles, research or resources.
- Chat Archives: Query old ChatGPT, Claude, iMessage or WeChat conversations to recall insights and decisions.
- Live Data Integration: Through MCP (Model Context Protocol), LEANN connects to platforms like Slack and Twitter, offering real-time semantic search on live data streams.
- Codebase Integration: Developers can integrate LEANN with Claude Code to enable semantic code search, AST-aware chunking and context-aware debugging directly within their IDEs.
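AST-aware chunking splits code along syntactic boundaries instead of fixed character windows, so each chunk holds a complete function or class. The sketch below shows the idea for Python using the standard-library `ast` module; it is our own illustration, not LEANN’s chunker, which may handle more languages and finer-grained nodes.

```python
import ast
from typing import List


def ast_chunks(source: str) -> List[str]:
    """Return one chunk per top-level function or class in Python source."""
    tree = ast.parse(source)
    chunks = []
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            # Exact source text of the definition (decorator lines are not included)
            segment = ast.get_source_segment(source, node)
            if segment:
                chunks.append(segment)
    return chunks


code = (
    "import os\n"
    "\n"
    "def load(path):\n"
    "    return open(path).read()\n"
    "\n"
    "class Index:\n"
    "    def add(self, text):\n"
    "        self.items.append(text)\n"
)
for chunk in ast_chunks(code):
    print(chunk, end="\n---\n")
```

Each chunk would then be indexed as one passage, which keeps a function’s signature and body together when it is retrieved.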

This makes LEANN not just a database but a universal RAG engine capable of powering both personal and enterprise-level AI assistants.

Easy Setup and Developer-Friendly API

LEANN is designed with simplicity in mind. Developers can set up a working RAG system in just a few lines of code:

    from pathlib import Path

    from leann import LeannBuilder, LeannChat, LeannSearcher

    # Store the index as demo.leann in the current working directory
    INDEX_PATH = str(Path("./").resolve() / "demo.leann")

    # Build an index with the HNSW backend
    builder = LeannBuilder(backend_name="hnsw")
    builder.add_text("LEANN saves 97% storage compared to traditional vector databases.")
    builder.build_index(INDEX_PATH)

    # Search: return the single closest chunk
    searcher = LeannSearcher(INDEX_PATH)
    results = searcher.search("vector database efficiency", top_k=1)
    print(results)

    # Chat with your data using a small Hugging Face model
    chat = LeannChat(INDEX_PATH, llm_config={"type": "hf", "model": "Qwen/Qwen3-0.6B"})
    response = chat.ask("How much storage does LEANN save?")
    print(response)

The Command-Line Interface (CLI) is equally straightforward, allowing users to build, search and chat with their data interactively. This simplicity makes LEANN ideal for both beginners and enterprise AI engineers.
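Conceptually, the chat step retrieves passages and hands them to the language model together with the question. The library-independent sketch below shows that retrieve-then-prompt pattern; `build_rag_prompt` and its template are hypothetical and are not taken from LEANN’s internals.

```python
from typing import List


def build_rag_prompt(question: str, passages: List[str], max_passages: int = 3) -> str:
    """Assemble a grounded prompt from the top retrieved passages (hypothetical template)."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages[:max_passages], start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_rag_prompt(
    "How much storage does LEANN save?",
    ["LEANN saves 97% storage compared to traditional vector databases."],
)
print(prompt)
```

Numbering the passages lets the model, or a later step, cite which retrieved chunk an answer came from.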

LEANN vs. Traditional Vector Databases

| Feature | Traditional Vector DB | LEANN |
|---|---|---|
| Storage Requirement | High (stores every embedding) | Up to 97% less |
| Privacy | Often cloud-hosted | 100% local |
| Speed | Fast but resource-heavy | Optimized for low-resource hardware; recomputation adds query-time work |
| Accuracy | High | Comparable in reported benchmarks |
| Setup Complexity | Moderate to high | Easy and scriptable |
| Portability | Limited | Fully portable |

The Vision Behind LEANN

LEANN is not just a tool; it’s part of a broader movement to democratize personal AI. The project aims to make advanced RAG systems accessible to everyone, even on modest hardware. By turning any laptop into a self-contained AI assistant, LEANN lowers the barriers of cost, cloud dependency and privacy concerns.

Its open-source nature also encourages collaboration. With contributions from AI researchers, developers and data scientists, LEANN continues to evolve rapidly, integrating new platforms and optimizing performance across multiple environments.

Conclusion

LEANN represents a pivotal innovation in the world of vector databases and retrieval systems. It challenges the assumption that high-performance AI requires high storage, cloud resources, or complex infrastructure. By introducing graph-based selective recomputation and offline-first design, it achieves extraordinary storage efficiency while ensuring total privacy and flexibility.

For researchers, developers and everyday AI users, it offers a clear path toward lightweight, personal and privacy-first artificial intelligence. Whether you’re managing academic papers, business data or millions of chat logs, LEANN gives you the power to RAG everything – securely, locally and intelligently.
