Artificial intelligence is evolving quickly, and inclusionAI’s Ling-1T is one of the most notable recent releases. Built on the Ling 2.0 architecture, Ling-1T is a trillion-parameter model designed to combine strong reasoning, speed and scalability in one open-source system.

Image Source: Hugging Face

Unlike many AI models that simply get “bigger,” Ling-1T focuses on getting smarter, using efficiency to rival top proprietary systems like GPT-5 and Gemini-2.5-Pro. It’s a big step forward for developers, researchers and anyone who wants cutting-edge AI capabilities without the limitations of closed platforms.

What Makes Ling-1T Different?

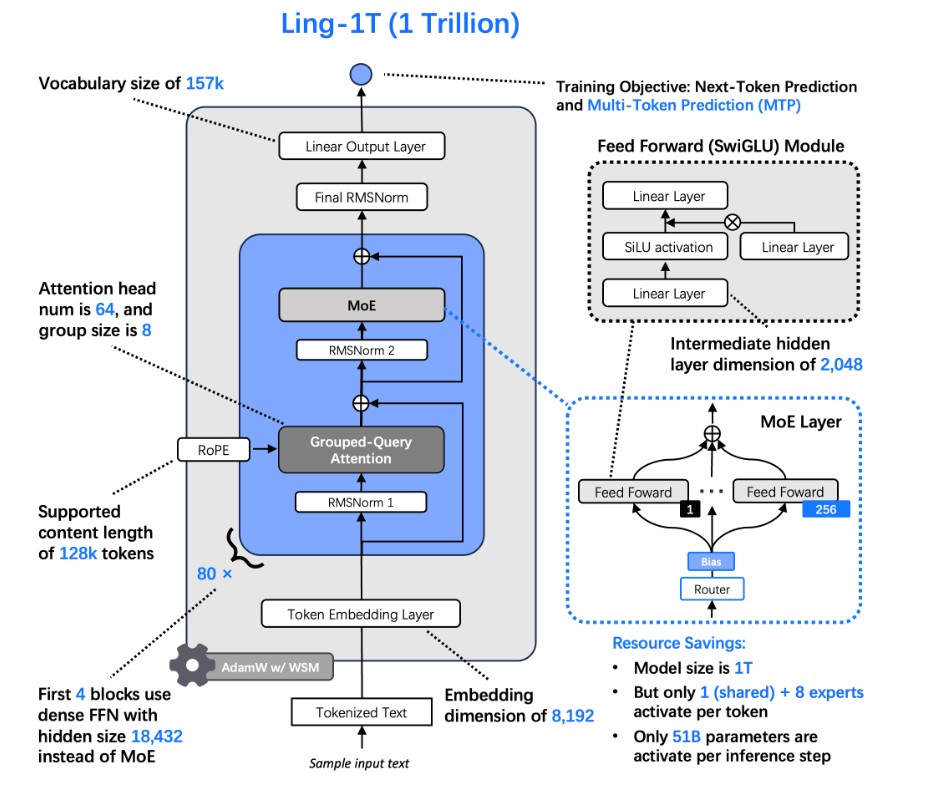

Most large language models use enormous amounts of computing power, making them expensive and difficult to scale. Ling-1T addresses this problem with its Mixture-of-Experts (MoE) architecture.

Instead of activating all 1 trillion parameters at once, Ling-1T selectively uses only the most relevant ones: around 50 billion per token, or roughly 5% of the total. This approach means less compute, lower costs and faster responses, with the aim of keeping quality intact.
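To make the idea of selective activation concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy illustration, not Ling-1T's actual router: the number of experts, the `top_k` value, the random router scores and the scalar "experts" are all assumptions chosen to keep the example small.

```python
import math
import random


def softmax(scores):
    """Convert raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_scores, top_k):
    """Pick the top_k experts for one token and renormalize their weights."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}


def moe_forward(x, experts, router_scores, top_k):
    """Run only the selected experts and mix their outputs by gate weight."""
    gates = route_token(router_scores, top_k)
    return sum(weight * experts[i](x) for i, weight in gates.items())


# Toy setup (hypothetical): 32 experts, each a simple scalar function.
NUM_EXPERTS = 32
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]

rng = random.Random(0)
router_scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

gates = route_token(router_scores, top_k=1)
output = moe_forward(2.0, experts, router_scores, top_k=1)
print(f"experts used: {sorted(gates)} of {NUM_EXPERTS}")
print(f"output: {output:.3f}")
```

The point of the sketch is that the unselected experts are never called, so their parameters cost nothing for that token. Real MoE layers do the same thing with learned routers and neural-network experts.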

It’s trained on more than 20 trillion tokens of carefully curated data and can handle an extended context length of up to 128K tokens. That means it can process very long documents, detailed instructions or multi-step reasoning tasks seamlessly.

The Power of Ling 2.0 Architecture

The core of Ling-1T lies in its Ling 2.0 architecture, designed around the Ling Scaling Law, a framework for efficiently managing trillion-parameter models.

Here’s how it stands out:

- Selective Activation: A 1/32 MoE activation ratio means only a small fraction of the experts run for each token, saving energy and boosting speed.

- Multi-Token Prediction (MTP): Trains the model to predict several upcoming tokens rather than only the next one, which is intended to strengthen compositional reasoning.

- Improved Normalization and Routing: Keeps training stable and reasoning more consistent.

- FP8 Mixed-Precision Training: Speeds up training while keeping accuracy high.

Ling-1T performs at the level of massive AI systems while remaining cost-effective and computationally efficient.
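As a rough illustration of the Multi-Token Prediction item above, the sketch below builds training pairs in which each position is asked to predict the next few tokens instead of just one. This is a generic picture of the idea, not inclusionAI's implementation; the function name `mtp_targets` and the `horizon` parameter are hypothetical.

```python
def mtp_targets(tokens, horizon):
    """For each position, pair the context so far with the next `horizon` tokens."""
    examples = []
    # Stop early enough that every target list has exactly `horizon` tokens.
    for pos in range(len(tokens) - horizon):
        context = tokens[: pos + 1]
        targets = tokens[pos + 1 : pos + 1 + horizon]
        examples.append((context, targets))
    return examples


tokens = ["The", "model", "predicts", "several", "tokens", "ahead"]
for context, targets in mtp_targets(tokens, horizon=2):
    print(context[-1], "->", targets)
```

With `horizon=1` this reduces to ordinary next-token prediction, which is a handy way to see what MTP adds.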

Training at an Unprecedented Scale

Training Ling-1T was a massive engineering challenge, and inclusionAI met it with a dataset of over 20 trillion reasoning-focused tokens.

The model was trained using a Warmup–Stable–Merge (WSM) schedule: the learning rate is warmed up, held stable, and checkpoints from training are then merged, with the goal of stable learning and better generalization. Over the course of training, the model steadily improved its grasp of logic, mathematics and natural language.
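The three phases in the Warmup–Stable–Merge name can be sketched in a few lines. This is only an assumption-laden toy: the linear warmup, the constant learning rate afterwards and the uniform averaging of checkpoints are choices made here for illustration, and the helper names are hypothetical. The article does not spell out how Ling-1T actually merges checkpoints.

```python
def warmup_stable_lr(step, peak_lr, warmup_steps):
    """Linear warmup to peak_lr, then hold it constant (no decay phase)."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    return peak_lr


def merge_checkpoints(checkpoints):
    """Average each named parameter across checkpoints with uniform weights."""
    count = len(checkpoints)
    merged = {}
    for name in checkpoints[0]:
        columns = zip(*(ckpt[name] for ckpt in checkpoints))
        merged[name] = [sum(values) / count for values in columns]
    return merged


# Toy checkpoints (hypothetical): one parameter vector saved at two steps.
saved = [{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}]
print([warmup_stable_lr(s, peak_lr=1.0, warmup_steps=4) for s in range(6)])
print(merge_checkpoints(saved))
```

Averaging several checkpoints from the stable phase is one way to smooth out noise in the weights without running a separate learning-rate decay.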

Additionally, the inclusionAI team used chain-of-thought datasets, teaching the model to think step-by-step like a human. This gives Ling-1T a strong advantage in reasoning and problem-solving tasks, especially in areas that require multi-step logic or code generation.

Smarter Post-Training with Evo-CoT and LPO

After the main training, Ling-1T went through two advanced fine-tuning stages that further enhanced its intelligence:

1. Evo-CoT (Evolutionary Chain-of-Thought)

This stage strengthens the model’s ability to reason logically and adaptively. Evo-CoT helps Ling-1T produce more accurate and coherent explanations, improving how it tackles complex reasoning questions.

2. LPO (Linguistics-Unit Policy Optimization)

Instead of optimizing token by token, LPO optimizes at the sentence level, treating each sentence as a meaningful unit. This makes Ling-1T’s responses more natural, stable and contextually aware, particularly during long, multi-turn conversations.

Together, these methods make Ling-1T feel much more human-like in its reasoning and expression.
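To picture what "sentence as a unit" means in LPO, the sketch below splits a response into sentences and gives every token in a sentence the same sentence-level score. It is only an illustration of the granularity: the article does not describe LPO's actual objective, so `score_fn` is a hypothetical placeholder and the regex-based splitter is a simplification.

```python
import re


def split_sentences(text):
    """Split text into sentence units on '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]


def sentence_level_weights(text, score_fn):
    """Give every token in a sentence the same sentence-level score."""
    weighted = []
    for sentence in split_sentences(text):
        score = score_fn(sentence)  # one score per sentence, not per token
        weighted.extend((token, score) for token in sentence.split())
    return weighted


response = "Ling-1T routes tokens to experts. Only a few experts run per token."
# Placeholder scorer (hypothetical): sentence length in characters.
for token, score in sentence_level_weights(response, score_fn=len):
    print(f"{token:<10} {score}")
```

In a token-level scheme each token would carry its own signal. Here the signal changes only at sentence boundaries, which is the contrast the LPO description draws.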

Real-World Performance

When tested across industry benchmarks, Ling-1T consistently delivered top-tier results. It excels in:

- Mathematical and logical reasoning

- Complex coding and front-end development

- Multi-language content creation

- Visual reasoning and UI/UX code generation

On ArtifactsBench, which measures visual understanding and design reasoning, Ling-1T ranked #1 among open-source models. In other words, it doesn’t just write code; it can also produce well-designed, functional front-end layouts.

Developer-Friendly and Easy to Integrate

Ling-1T was built with developers in mind. It integrates smoothly with popular tools like Hugging Face Transformers, OpenAI-compatible APIs and vLLM for high-speed inference.

Here’s a simple example of how to use Ling-1T with the ZenMux API:

from openai import OpenAI

# ZenMux is called through the OpenAI client; replace the placeholder with your own key.
client = OpenAI(base_url="https://zenmux.ai/api/v1", api_key="<ZENMUX_API_KEY>")

completion = client.chat.completions.create(
    model="inclusionai/ling-1t",
    messages=[{"role": "user", "content": "Explain efficient reasoning in AI."}],
)

print(completion.choices[0].message.content)
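For multi-turn conversations, the same call can be wrapped in a small helper that keeps the running message history. This is a minimal sketch: the `ask` function is hypothetical, and it assumes `client` is an OpenAI-compatible client like the one created above.

```python
def ask(client, history, user_text, model="inclusionai/ling-1t"):
    """Send the conversation so far plus a new user turn; return the reply text."""
    history.append({"role": "user", "content": user_text})
    completion = client.chat.completions.create(model=model, messages=history)
    reply = completion.choices[0].message.content
    # Store the reply so the next call sees the full conversation.
    history.append({"role": "assistant", "content": reply})
    return reply


# Usage, with the `client` from the example above:
# history = [{"role": "system", "content": "You are a concise assistant."}]
# print(ask(client, history, "Explain efficient reasoning in AI."))
# print(ask(client, history, "Now give a one-line summary."))
```

Keeping the roles explicit in `history` makes it easier to inspect what the model actually received on each turn.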

For larger deployments, Ling-1T supports distributed serving via SGLang, making it well suited to enterprise or research-scale applications.

Current Challenges and Future Roadmap

Even with its impressive achievements, Ling-1T still faces a few challenges. These include:

- High attention cost for extremely long contexts

- Limited memory for multi-turn reasoning

- Occasional role mix-ups in complex dialogues
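The first item is easy to quantify with a bit of arithmetic. The sketch below assumes standard full self-attention, where every token attends to every other token, so the score matrix grows with the square of the context length. The article does not describe Ling-1T's attention implementation in detail, so treat this as a general illustration; the function names are hypothetical, and 128K is taken as 131,072 tokens.

```python
def attention_score_entries(context_len):
    """Entries in one full self-attention score matrix (per head, per layer)."""
    return context_len * context_len


def relative_cost(long_len, short_len):
    """How many times more score entries the longer context needs."""
    return attention_score_entries(long_len) / attention_score_entries(short_len)


for tokens in (8_192, 32_768, 131_072):
    ratio = relative_cost(tokens, 8_192)
    print(f"{tokens:>7} tokens -> {ratio:.0f}x the score entries of 8,192 tokens")
```

Going from 8K to 128K tokens is a 16x longer context, but under this assumption it means 256x as many attention scores.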

However, inclusionAI has already announced that the next generation, Ling-2T, will address these issues with hybrid attention systems, enhanced memory modules and agentic reasoning capabilities.

Open-Source and Accessible for All

One of the best things about Ling-1T is that it’s fully open-source under the MIT License. That means anyone, whether developers, researchers or businesses, can use, modify or fine-tune it freely.

You can access the model here:

Conclusion

The release of Ling-1T marks a major milestone in open-source AI. It’s not just about having one trillion parameters; it’s about using them intelligently.

By combining an efficient architecture, large-scale training and reasoning-focused post-training, inclusionAI has created a model that’s both accessible and cutting-edge. Ling-1T shows that open-source AI can compete with, and even lead, the next generation of intelligent systems.

As we look ahead, it’s clear that the future of AI isn’t just about bigger models; it’s about smarter, faster and more efficient ones. Ling-1T offers a concrete picture of what that future could look like.

