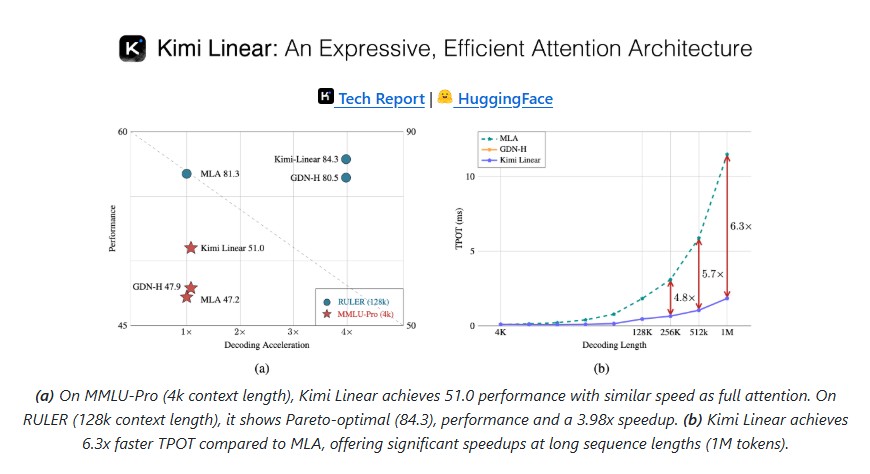

Kimi Linear: The Future of Efficient Attention in Large Language Models

The rapid evolution of large language models (LLMs) has unlocked new capabilities in natural language understanding, reasoning, coding and multimodal tasks. However, as models grow more advanced, one major challenge persists: computational efficiency. Traditional full-attention architectures struggle to scale efficiently, especially when handling long context windows and real-time inference workloads. The increasing demand for agent-like … Read more