The field of artificial intelligence is evolving rapidly, and self-supervised learning has become a driving force behind breakthroughs in computer vision and 3D scene understanding. Traditional supervised learning relies heavily on labeled datasets, which are expensive and time-consuming to produce. Self-supervised learning, by contrast, extracts meaningful patterns from raw data without manual labels, allowing models to scale to far larger datasets.

However, a major challenge remains: most self-supervised models focus on a single data modality, such as images or 3D point clouds. This isolated approach limits the richness of the learned representations, much as a person relying on sight alone, or touch alone, would struggle to understand the world fully.

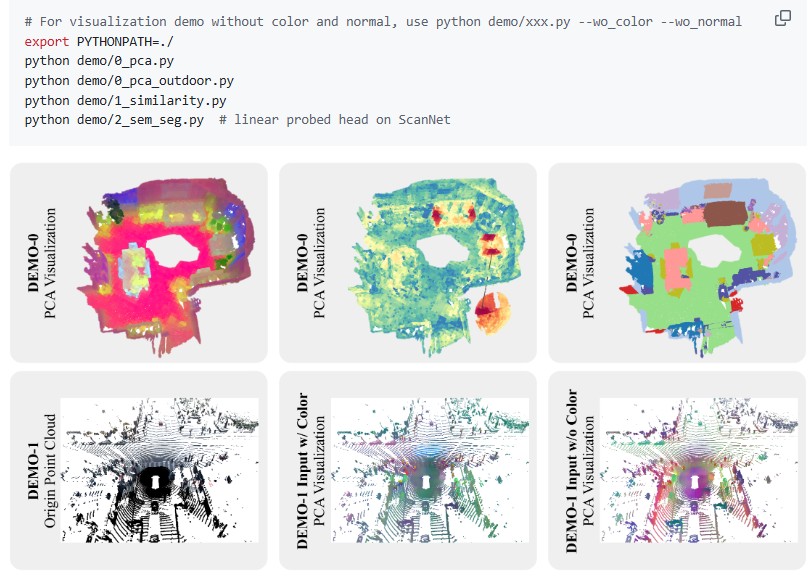

A groundbreaking research framework, Concerto, addresses this problem by combining 2D image learning with 3D point cloud learning in a single self-supervised pipeline. Inspired by human multisensory perception, Concerto learns powerful spatial representations, outperforming existing state-of-the-art (SOTA) 2D and 3D self-supervised models on multiple benchmarks.

In this article, we explain what Concerto is, how it works and why it represents a leap forward for AI vision systems, robotics, AR/VR, autonomous vehicles and foundation models of the future.

What Is Concerto?

Concerto is a joint 2D-3D self-supervised learning framework designed to build superior spatial representations without requiring labeled data. Its core idea mirrors how humans learn to understand objects and environments through multiple sensory inputs – seeing, touching and interacting.

Instead of training vision and 3D models separately, Concerto trains them together so that each guides and enhances the other. This results in representations that capture both:

- Fine-grained geometric structure (from 3D point clouds)

- Rich texture and semantic information (from 2D images)

Learned jointly, these cues give a richer picture of the physical world than models trained on either modality in isolation.

Why Single-Modal Learning Falls Short

Established self-supervised methods such as DINOv2 (for images) and Sonata (for point clouds) have achieved impressive results. However, the Concerto research shows that each modality captures different cues, and that simply combining the two models' outputs after training is not enough to exploit them fully.

Concerto demonstrates that joint learning during training produces richer, more unified spatial features than either modality can achieve alone or via naive feature fusion.

How Concerto Works: Key Innovations

Concerto blends two major learning components:

1. Intra-Modal Self-Distillation (3D Focus)

Following Sonata, Concerto trains a point cloud encoder (the student) to match the predictions of a momentum-updated teacher, a copy of the encoder whose weights track a moving average of the student's. This lets the network build a deep geometric understanding directly from raw point clouds.
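The teacher-student loop can be sketched in a few lines of plain Python. This is a generic DINO-style self-distillation step, not Sonata's or Concerto's actual code: the temperatures (0.1 for the student, 0.04 for the teacher) and the momentum of 0.996 are illustrative assumptions, and real encoders would compute these logits from point features rather than receive hand-written lists.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float) -> List[float]:
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: Sequence[float],
                      teacher_logits: Sequence[float],
                      t_student: float = 0.1,   # assumed value
                      t_teacher: float = 0.04,  # assumed value (sharper target)
                      ) -> float:
    """Cross-entropy H(teacher, student): pushes the student's distribution
    toward the teacher's sharper one. The teacher receives no gradient."""
    p_teacher = softmax(teacher_logits, t_teacher)
    p_student = softmax(student_logits, t_student)
    return -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))


def ema_update(teacher_params: Sequence[float],
               student_params: Sequence[float],
               momentum: float = 0.996,  # assumed value
               ) -> List[float]:
    """Momentum (EMA) update: teacher <- m * teacher + (1 - m) * student."""
    return [momentum * t + (1.0 - momentum) * s
            for t, s in zip(teacher_params, student_params)]
```

In a full training loop, the student would be updated by gradient descent on `distillation_loss`, after which `ema_update` moves the teacher a small step toward it. Because the teacher changes slowly, it gives the student a stable target to learn from.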

2. Cross-Modal Joint Embedding Prediction

This is where Concerto shines. The model learns to align 3D point features with the corresponding 2D image patch features: it uses camera parameters to project each 3D point onto an image pixel, pairing every point with the patch it falls in and allowing each modality to reinforce the other.

This process mirrors how humans form concepts: seeing an object can evoke its weight or shape, and touching it can call to mind its color or visual texture.
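The point-to-pixel pairing can be sketched as follows. Everything here is an illustrative assumption rather than Concerto's implementation: a standard pinhole camera model (rotation `R`, translation `t`, intrinsics `fx, fy, cx, cy`), 14-pixel ViT patches (the patch size DINOv2 uses), image dimensions that are exact multiples of the patch size, and a cosine-distance objective that pulls each visible point's feature toward the feature of the patch it projects into. All function names are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def project_point(p_world: Vec3, R: Sequence[Sequence[float]], t: Vec3,
                  fx: float, fy: float, cx: float, cy: float,
                  width: int, height: int) -> Optional[Tuple[float, float]]:
    """Pinhole projection world -> camera -> pixel (u, v).
    Returns None if the point is behind the camera or outside the image."""
    # Camera-frame coordinates: p_cam = R @ p_world + t
    x, y, z = (sum(R[i][j] * p_world[j] for j in range(3)) + t[i] for i in range(3))
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    if not (0 <= u < width and 0 <= v < height):
        return None
    return u, v


def patch_index(u: float, v: float, patch_size: int, width: int) -> int:
    """Row-major index of the ViT patch containing pixel (u, v).
    Assumes width is a multiple of patch_size."""
    cols = width // patch_size
    return int(v // patch_size) * cols + int(u // patch_size)


def cosine_loss(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity; near 0 when the features point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (na * nb + 1e-8)


def cross_modal_loss(points: Sequence[Vec3],
                     point_feats: Sequence[Sequence[float]],
                     patch_feats: Sequence[Sequence[float]],
                     R: Sequence[Sequence[float]], t: Vec3,
                     intrinsics: Tuple[float, float, float, float],
                     width: int, height: int, patch_size: int = 14) -> float:
    """Mean cosine loss between each visible point's feature and the image
    patch feature it projects into; points that do not project are skipped."""
    fx, fy, cx, cy = intrinsics
    losses: List[float] = []
    for p, f in zip(points, point_feats):
        uv = project_point(p, R, t, fx, fy, cx, cy, width, height)
        if uv is None:
            continue
        idx = patch_index(uv[0], uv[1], patch_size, width)
        losses.append(cosine_loss(f, patch_feats[idx]))
    return sum(losses) / len(losses) if losses else 0.0
```

Skipping points that fall behind the camera or outside the frame matters in practice: a single image sees only part of a scene, so only the visible subset of the point cloud receives a 2D target.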

Performance and Benchmarks

Concerto achieves state-of-the-art results across multiple tasks, including semantic segmentation, instance segmentation and video-based spatial reconstruction.

Key highlights include:

- 77.3% mIoU on ScanNet with linear probing, surpassing both DINOv2 and Sonata on their own

- 80.7% mIoU on ScanNet with full fine-tuning, a new state-of-the-art result for 3D semantic segmentation

- Better performance than simply concatenating the features of 2D and 3D models, evidence of genuine cross-modal synergy

- Strong data efficiency: it performs well even with a limited number of training scenes

- Support for point clouds lifted from video, aimed at real-time spatial understanding
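For readers unfamiliar with the metric, mean intersection-over-union (mIoU) can be computed as below. In linear probing, the pretrained encoder is frozen and only a linear classifier is trained on its features; that classifier's per-point predictions would be scored against ground-truth labels in this way. The toy labels are made up, and skipping classes absent from both prediction and ground truth is one common convention, not necessarily the exact ScanNet evaluation protocol.

```python
from typing import List, Sequence


def mean_iou(pred: Sequence[int], target: Sequence[int], num_classes: int,
             ignore_index: int = -1) -> float:
    """Per-class IoU = TP / (TP + FP + FN), averaged over the classes that
    occur in the predictions or the ground truth.
    Assumes every prediction is a valid class id in [0, num_classes)."""
    tp: List[int] = [0] * num_classes
    fp: List[int] = [0] * num_classes
    fn: List[int] = [0] * num_classes
    for p, t in zip(pred, target):
        if t == ignore_index:      # unlabeled points are not scored
            continue
        if p == t:
            tp[t] += 1
        else:
            fp[p] += 1             # predicted class p where it was wrong
            fn[t] += 1             # missed the true class t
    ious = [tp[c] / (tp[c] + fp[c] + fn[c])
            for c in range(num_classes) if tp[c] + fp[c] + fn[c] > 0]
    return sum(ious) / len(ious) if ious else 0.0
```

On the toy input `mean_iou([0, 0, 1, 1], [0, 1, 1, 1], 2)`, class 0 scores 1/2 and class 1 scores 2/3, giving an mIoU of 7/12 (about 0.583). Averaging per class, rather than per point, keeps rare classes from being drowned out by common ones such as walls and floors.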

Concerto also shows an early ability to connect to language: aligning its features with CLIP's embedding space moves it toward open-world semantic perception.
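One common way to use such an alignment is open-vocabulary labeling, sketched below under clearly stated assumptions: a hypothetical learned linear map `W` takes a point feature into CLIP's embedding space, and each class name is represented by its CLIP text embedding. The two-dimensional "embeddings" in the example are toy values, not real CLIP outputs, and `open_vocab_label` is an illustrative name rather than part of any released API.

```python
import math
from typing import Dict, List, Sequence


def matvec(W: Sequence[Sequence[float]], x: Sequence[float]) -> List[float]:
    """Compute W @ x for a matrix stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity, with a small epsilon guarding against zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb + 1e-8)


def open_vocab_label(point_feat: Sequence[float],
                     W: Sequence[Sequence[float]],
                     text_embeds: Dict[str, Sequence[float]]) -> str:
    # Hypothetical: W maps a point feature into the CLIP embedding space.
    z = matvec(W, point_feat)
    # Pick the class whose text embedding is most similar to the projected feature.
    return max(text_embeds, key=lambda name: cosine(z, text_embeds[name]))


W = [[1.0, 0.0], [0.0, 1.0]]  # toy identity projection
labels = {"chair": [1.0, 0.0], "table": [0.0, 1.0]}  # toy "text embeddings"
print(open_vocab_label([0.9, 0.1], W, labels))  # chair
```

Under these assumptions, adding a new category only requires a new text embedding, not retraining the point encoder, which is what makes this style of labeling "open-world".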

Why Concerto Matters

Concerto’s breakthroughs have major implications across industries:

| Industry | Impact |
|---|---|
| Robotics | More robust 3D perception for grasping, navigation, and mapping |
| Autonomous Vehicles | Better scene understanding and obstacle recognition |
| AR/VR & Metaverse | Real-world scene grounding and 3D semantic awareness |
| Construction & Real Estate | Accurate indoor scene modeling |
| AI Foundation Models | Step toward multimodal world-modeling |

By tapping into the power of unified multimodal learning, Concerto brings AI one step closer to human-like scene understanding.

Future Directions

The research behind Concerto points to several exciting future directions, including:

- Fully joint training of both image and point cloud encoders

- Deeper language-grounded 3D understanding

- Unified frameworks across indoor, outdoor and video-based domains

This is just the beginning of multimodal perception for AI systems.

Conclusion

Concerto represents a major milestone in self-supervised spatial learning. By uniting 2D and 3D perception in a single efficient framework, it shows that multisensory synergy yields richer, more generalizable representations of the world. As AI moves toward foundation models that can reason about the physical world, Concerto marks an important step forward, paving the way for smarter robots, immersive AR/VR systems, autonomous vehicles and universal perception engines.


